The simplest ML model: reading my own handwriting

✍️ Recognizing handwritten digits; Extracting wisdom from the MNIST data set; Machine Learning is a pretty fancy term for what we're going to do

I like writing by hand.

Journaling on paper is relaxing. The thoughts flow easy. Seeing the initially blank sheet progressively fill up with thoughts is satisfying.

What is not satisfying is trying to decipher my own squibbles 🙈. What it would take to write a program that can recognize handwriting?

The mother of all data sets

How we’re going to do this? Of course, Machine Learning 💫.

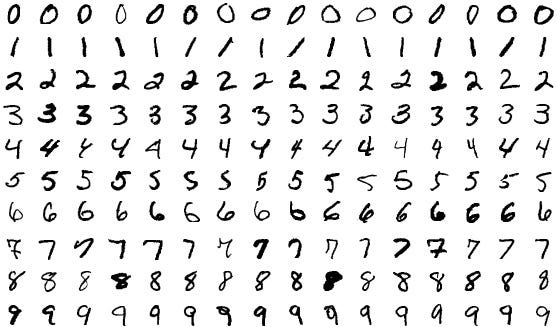

Machine Learning is about computers learning from examples, so we’re gonna need some examples. MNIST is a classic data set of handwritten digits. It was compiled in the 1990s, and has been used to evaluate image recognition algorithms ever since.

MNIST contains 60 000 training images. These pictures are already labelled with the digit that they represent. We can use these examples to train a model that will later recognize handwritten digits it hasn’t been trained on (specifically, my own handwriting :)).

Squeeze the data, make lemonade

Let’s just build a model that can distinguish just 3s and 7s.

Building a model means finding a way to extract relevant information (relevant to the problem we want to solve) from a bunch of examples.

In this case, each example is a square grid of 28 pixels by 28 pixels:

How do we convert a collection of pictures representing the digit “3”, into one model that represents the knowledge about 3s accumulated in all these examples?

The method of creating the model will determine how effective our system will be. For a good modern system, we’d use convolutions to train a neural network on the data set, similar to what we saw a few weeks ago (part 1, part 2). But today let’s look at a method that does not use neural networks at all, illustrating that Machine Learning can be in fact very simple.

For our model, we will just go pixel by pixel, and compute the average pixel value in each location, across all pictures representing the digit “3”, and all pictures representing the digit “7”.

What we get is an “idealized” model of what 3s and 7s look like in general, based on all of the examples in the data set. The models are fuzzy, but that’s the point – the models need to match a variety of different writing styles.

Telling them apart

Now that we have the models, let’s give them a try on my handwriting!

After cropping and fixing the resolution, we get something we can compare with the models.

True to the spirit of simplicity, we’re going to compare the pictures with the models using simple pixel-by-pixel difference score. We will use the mean square error formula to give bigger weight to bigger pixel differences.

That seems to work:

✅ For my handwritten 7, it gets lower error when compared with the model 7 (3985.131 as opposed to 2346.122)

✅ For the handwritten 3, it gets lower error when compared with the model 3 (2413.402 as opposed to 3003.247)

🥳

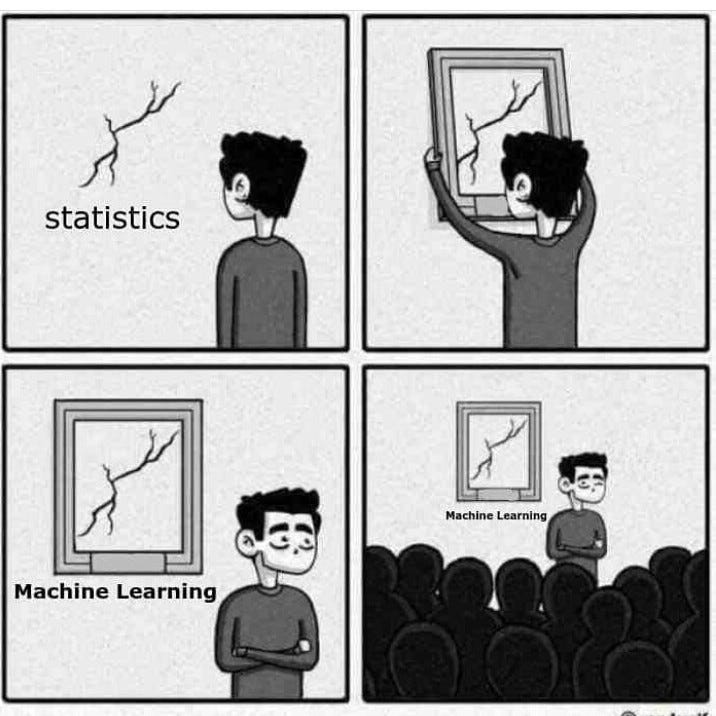

Wait, isn’t this just statistics?

We call this Machine Learning, but all we did was to average a bunch of numbers.

Isn’t this just statistics? (or even just arithmetics?)

Well, yes.

More on this

📋 Kaggle notebook with all the code

📖 Chapter 4 of the fast AI book

The MNIST data set we used today is the same data set that Jeremy Howard mentioned in the quote we saw last week: Fundamentally the stuff that’s in the course now is not that different from (..) what Yann LeCun used on MNIST back in 1996.)

In other news

✨ Google DeepMind announced a new family of models with radically bigger context windows. In a future edition we will look more into what it means 💫.

🤖 OpenAI is adding memory to ChatGPT. Last call to start being nice and courteous when talking to the AI.

⛏️ Nvidia, the leading manufacturer of GPU accelerators, is now worth more than Alphabet and Amazon. During the gold rush, it’s nice to be the main shovel shop in town.

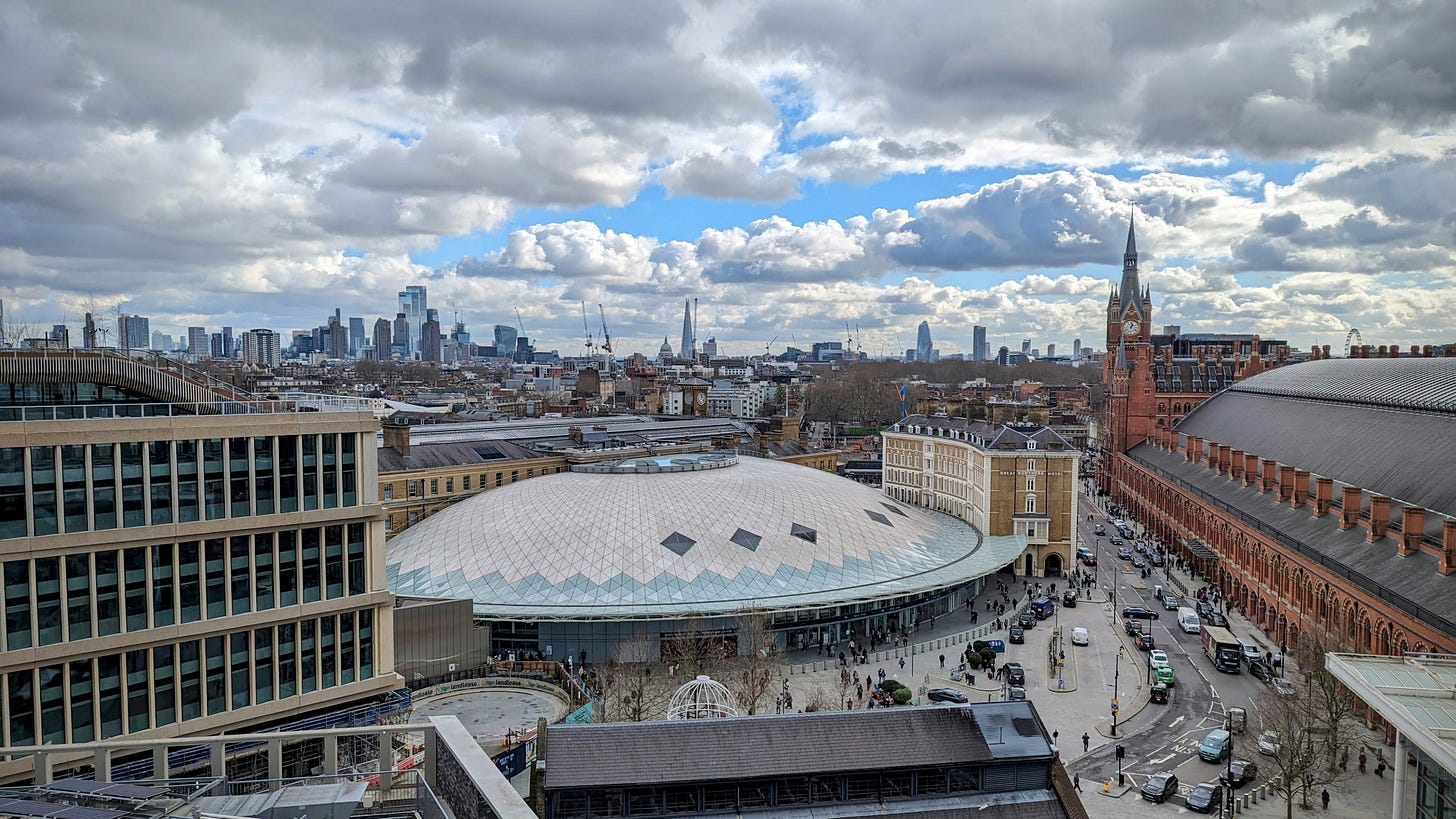

Postcard from London

Heading to London for the weekend. Excited to see Tommy Wiseau (the mad genius behind the movie The Room); and to catch some Space Invaders 👾.

Have a nice week,

– Przemek