Seeing like a neural network: chihuahua or blueberry muffin

Blueberries and eyes, 300 million neurons, 3x3 masks are all you need

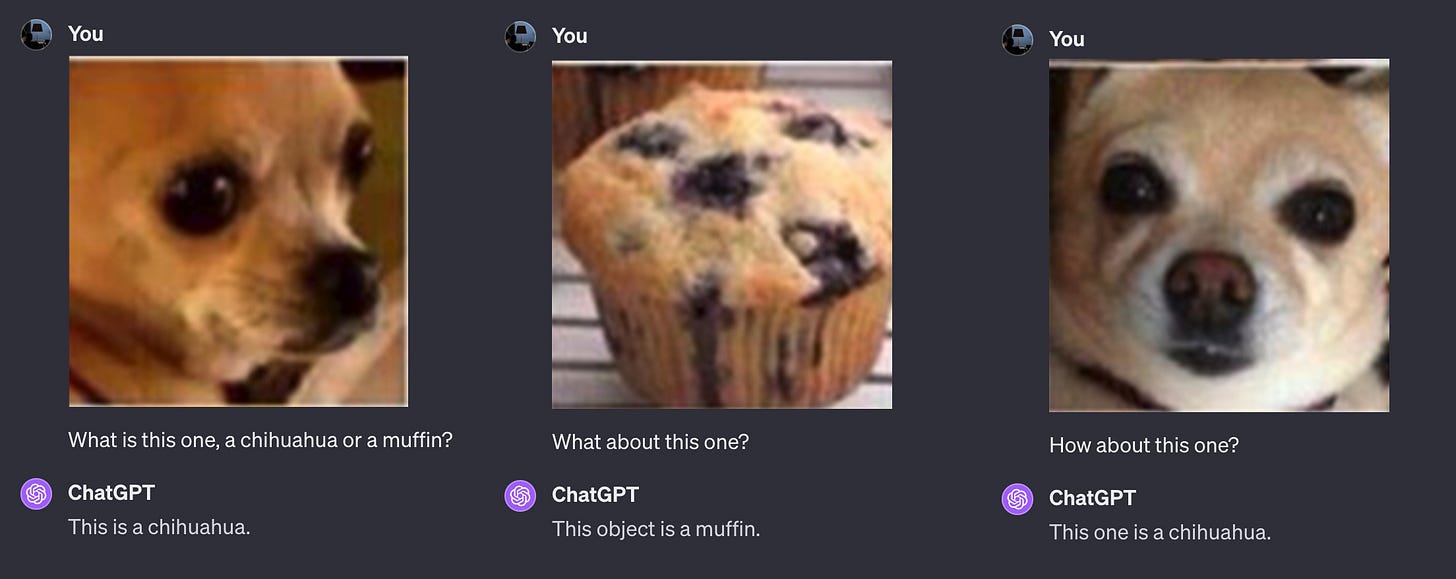

Can you tell apart a cute blueberry muffin from a glossy-eyed chihuahua?

The two can look deceptively similar (especially once you crop out the chihuahua ears :)), making it a good test for image recognition systems.

Blueberries and eyes

For the longest time, image recognition was considered easy for humans but very hard for computers. Just take a look at the picture below, and marvel at how easy it is for you to tell blueberries apart from chihuahua eyes:

Image understanding is easy for humans, because a lot of our brain machinery is dedicated to processing vision signals. Seeing a single face activates up to 300 million neurons in the visual cortex. That’s a lot of neurons, and evolution had millions of years to perfect how they’re wired together.

Computers, on the other hand, are not inherently built to solve image understanding problems. They're built to add and multiply numbers very fast. The only abstract tasks computers can handle are those that we humans have managed to translate into simple number crunching.

So how do today's AI systems recognize chihuahuas?

Looking for masks

Last week, we saw how computers solve the simplest image recognition problems: looking for edges in a picture of a space invader. The key is to use a “mask” (also called a “convolution kernel” or “filter”) representing the pattern we are looking for, and then compare it with the pixels at every position in the image. This sliding comparison is called a “convolution”.

Example mask representing a stripe of dark pixels surrounded by brighter pixels:

(We won’t repeat the details of how convolution masks work here. If you missed the last edition, you can catch up here. 💫)
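For readers who want to see the mechanics anyway, here is a minimal Python/NumPy sketch of sliding a 3x3 mask over every position of an image. The mask values, the toy 5x5 image and the `apply_mask` helper are illustrative assumptions, not the exact mask from the figure. As in most deep learning libraries, the mask is not flipped, so mathematically this is cross-correlation even though it is usually called convolution.

```python
import numpy as np

def apply_mask(image: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Slide `mask` over every position of `image` and score how well each patch matches.

    Each output value is the sum of element-wise products between the mask and the
    image patch under it (no padding, stride 1).
    """
    mh, mw = mask.shape
    out_h = image.shape[0] - mh + 1
    out_w = image.shape[1] - mw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + mh, j:j + mw]
            out[i, j] = np.sum(patch * mask)
    return out

# Hypothetical mask: bright rows (+1) above and below a dark row (-2).
# Its values sum to zero, so a uniformly bright patch scores 0.
stripe_mask = np.array([
    [ 1,  1,  1],
    [-2, -2, -2],
    [ 1,  1,  1],
])

# Toy 5x5 image: 1 = bright pixel, 0 = dark pixel, with a dark stripe in row 2.
image = np.ones((5, 5))
image[2, :] = 0

print(apply_mask(image, stripe_mask))
```

The result is a 3x3 grid whose middle row is 6 (the mask sits exactly on the stripe) and whose other rows are -3 (the mask's dark row lands on bright pixels), so the highest scores mark where the pattern is.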

Small masks ➡️ complex objects

To move from recognizing simple patterns to recognizing increasingly complex objects, we can apply the masks hierarchically. We start by looking for small patterns (using small masks) in the full-size picture. Then we progressively make the picture smaller by reducing its resolution, so that the same small masks cover a larger and larger part of the original scene.
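A minimal sketch of this two-step recipe in Python/NumPy, under a few assumptions the post doesn't spell out: resolution is reduced with 2x2 max pooling (one common choice, keeping the strongest score in each 2x2 block), negative mask scores are clipped to zero between layers as real networks typically do, and the mask values are random placeholders rather than learned ones.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def apply_mask(image, mask):
    """Score every mask-sized patch of `image` against `mask` (no padding, stride 1)."""
    windows = sliding_window_view(image, mask.shape)   # shape (H', W', mh, mw)
    return np.einsum("ijkl,kl->ij", windows, mask)

def downsample(image, factor=2):
    """Reduce resolution by keeping the max of each factor x factor block (max pooling)."""
    h = image.shape[0] // factor * factor
    w = image.shape[1] // factor * factor
    blocks = image[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.max(axis=(1, 3))

rng = np.random.default_rng(0)
image = rng.random((8, 8))            # stand-in for a tiny grayscale picture
mask1 = rng.standard_normal((3, 3))   # placeholder masks; a real network learns them
mask2 = rng.standard_normal((3, 3))

x = np.maximum(apply_mask(image, mask1), 0)   # layer 1: 8x8 -> 6x6, negatives clipped to 0
x = downsample(x)                             # lower resolution: 6x6 -> 3x3
x = apply_mask(x, mask2)                      # layer 2: 3x3 -> 1x1
print(x.shape)  # (1, 1)
```

Both masks are only 3x3, yet the single number at the end depends on all 64 pixels of the original picture: each layer-1 score sees a 3x3 patch, each pooled value sees a 4x4 patch, and the layer-2 mask sees the whole 8x8 image.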

OK, but how do we know which sequence of masks will produce a system that can recognize specifically chihuahuas?

Learning the masks

The big bang breakthrough of image recognition was the idea that the masks can be learned from data using neural networks.

Instead of hand-designing the right masks to represent various objects, we do two things:

🎲 set all of the masks to random values

✨ use a big data set of labeled images to tune the neural network. As we test the neural network on the images, we keep tuning the numbers in each mask to yield progressively better results. (This uses the same gradient descent method we saw when predicting survival of Titanic passengers.)
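The two steps can be sketched for a single mask in Python/NumPy. This is a deliberately simplified toy, not how a full image classifier is trained: the “labels” here are the responses of a hypothetical hidden `true_mask` rather than categories like “chihuahua”, there is only one layer, and the learning rate and step count are arbitrary choices. The update rule is plain gradient descent on the mean squared error.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

rng = np.random.default_rng(42)

# Hypothetical hidden pattern used to generate the labels: the dark-stripe mask.
true_mask = np.array([[1., 1., 1.], [-2., -2., -2.], [1., 1., 1.]])

# Toy labeled data set: random 8x8 images paired with the responses of true_mask.
images = rng.random((200, 8, 8))
windows = sliding_window_view(images, (3, 3), axis=(1, 2))   # (200, 6, 6, 3, 3)
targets = np.einsum("nijkl,kl->nij", windows, true_mask)     # (200, 6, 6)

mask = rng.standard_normal((3, 3))   # step 1: start from random values
learning_rate = 0.1

for step in range(500):              # step 2: keep tuning the mask on the data
    predictions = np.einsum("nijkl,kl->nij", windows, mask)
    errors = predictions - targets
    loss = np.mean(errors ** 2)
    # Gradient of the mean squared error with respect to each of the 9 mask numbers.
    grad = 2 * np.einsum("nijkl,nij->kl", windows, errors) / errors.size
    mask -= learning_rate * grad     # nudge every number in the direction that lowers the error

print(np.round(mask, 2))             # should end up close to true_mask
```

After a few hundred steps the randomly initialized mask should settle very close to the stripe pattern. A real network applies the same idea to millions of mask numbers spread across many layers at once.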

Seeing like a neural network

In a classic Deep Learning turn of events, we first saw that this method works, and only later how it works.

In 2013, Matt Zeiler & Rob Fergus published a study that explains what sort of masks are “learned” by each layer of the neural network, and what types of pictures can be recognized at each level.

The first layer learns how to recognize gradients and simple edges:

The second layer can recognize patterns, stripes and circles:

By the time we make it to layer 5, the network can recognize dogs and bicycle wheels!

Limitations

There’s a limit to everything! While GPT4 seems very good at recognizing objects in a single picture, it seems to struggle with composite images. Here it didn’t even get the number of objects right:

It wouldn’t be too hard to write a preprocessing step that first cuts the initial picture into smaller images, then feeds each of them into the image recognition module, and finally assembles the results. ChatGPT presumably skips this step, either for simplicity or to avoid the compute cost of making multiple image recognition requests.
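A minimal sketch of such a preprocessing step in Python/NumPy, assuming the composite is a regular grid whose number of rows and columns is known in advance (a real composite might first need its borders detected). `split_into_tiles` and `recognize_composite` are hypothetical helpers, and `fake_recognize` is a placeholder that merely thresholds brightness; it stands in for a real image recognition model and is not any actual API.

```python
from collections import Counter

import numpy as np

def split_into_tiles(image, rows, cols):
    """Cut an image array (height x width [x channels]) into a rows x cols grid of tiles."""
    height, width = image.shape[:2]
    tiles = []
    for r in range(rows):
        for c in range(cols):
            top, bottom = r * height // rows, (r + 1) * height // rows
            left, right = c * width // cols, (c + 1) * width // cols
            tiles.append(((r, c), image[top:bottom, left:right]))
    return tiles

def recognize_composite(image, rows, cols, recognize):
    """Run `recognize` on every tile separately (one request per tile) and collect the labels."""
    return {position: recognize(tile) for position, tile in split_into_tiles(image, rows, cols)}

def fake_recognize(tile):
    """Hypothetical placeholder for a real recognizer: labels bright tiles as chihuahuas."""
    return "chihuahua" if tile.mean() > 0.5 else "muffin"

# Toy 4x4 composite: only the top-left tile is bright.
composite = np.zeros((400, 400))
composite[:100, :100] = 1.0

results = recognize_composite(composite, rows=4, cols=4, recognize=fake_recognize)
counts = Counter(results.values())
print(results[(0, 0)], counts)
```

On this toy composite the sketch reports 1 chihuahua and 15 muffins. Each tile costs one recognition call, so a 4x4 grid means 16 requests instead of one, which is the compute trade-off mentioned above.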

In other news

📝 Diversify out. Louie Bacaj on tech workers pivoting their careers away from big tech. (A topic Louie knows well from his work in the Small bets community.)

🔉 If books could kill. My newest podcast discovery, two guys roasting popular non-fiction books. Great supporting material for Read fewer books.

📝 The man who carried computer science on his shoulders. Remembering the pioneering Dutch computer scientist who once tried and failed to register his employment as a programmer: the municipal authorities of the town of Amsterdam did not accept it on the grounds that there was no such profession.

Postcard from Paris

Paris celebrates Saturday with light, sound and a floating medusa made of a curtain propped up by an umbrella.

Asked to describe the picture, GPT4 has this to say: “The image depicts an indoor scene with a vibrant atmosphere, likely a party or concert, taking place in a space with a curved ceiling, reminiscent of an underground tunnel or an industrial venue.” Not bad 💫!

Have a nice week,

– Przemek
