Basic ideas are forever, everything else is tweaks

🚀 Catching up with Deep Learning; putting in the hours; life choices and self-defeating goals

Every new thing brings about the anxiety of being late to the party.

“Is it too late to learn about Deep Learning?” has been one of the top questions in AI-related forums over the last year. (Along with the other great classic: “How to get stable diffusion to generate NSFW images?”)

The hours

Is 35 too old to start with machine learning?

Whether we’re “too old” to learn something is almost always the wrong question.

The right question is: what sparks your interest? And then: are you willing to put in the hours to learn about it?

With the right materials and teachers, it doesn’t take too long to learn anything.

The best way to find out if deep learning (or any other field or skill) is right for you is to dive in and practice. In 10 hours you can play your first song on a guitar, build your first website, or fine-tune a deep learning model.

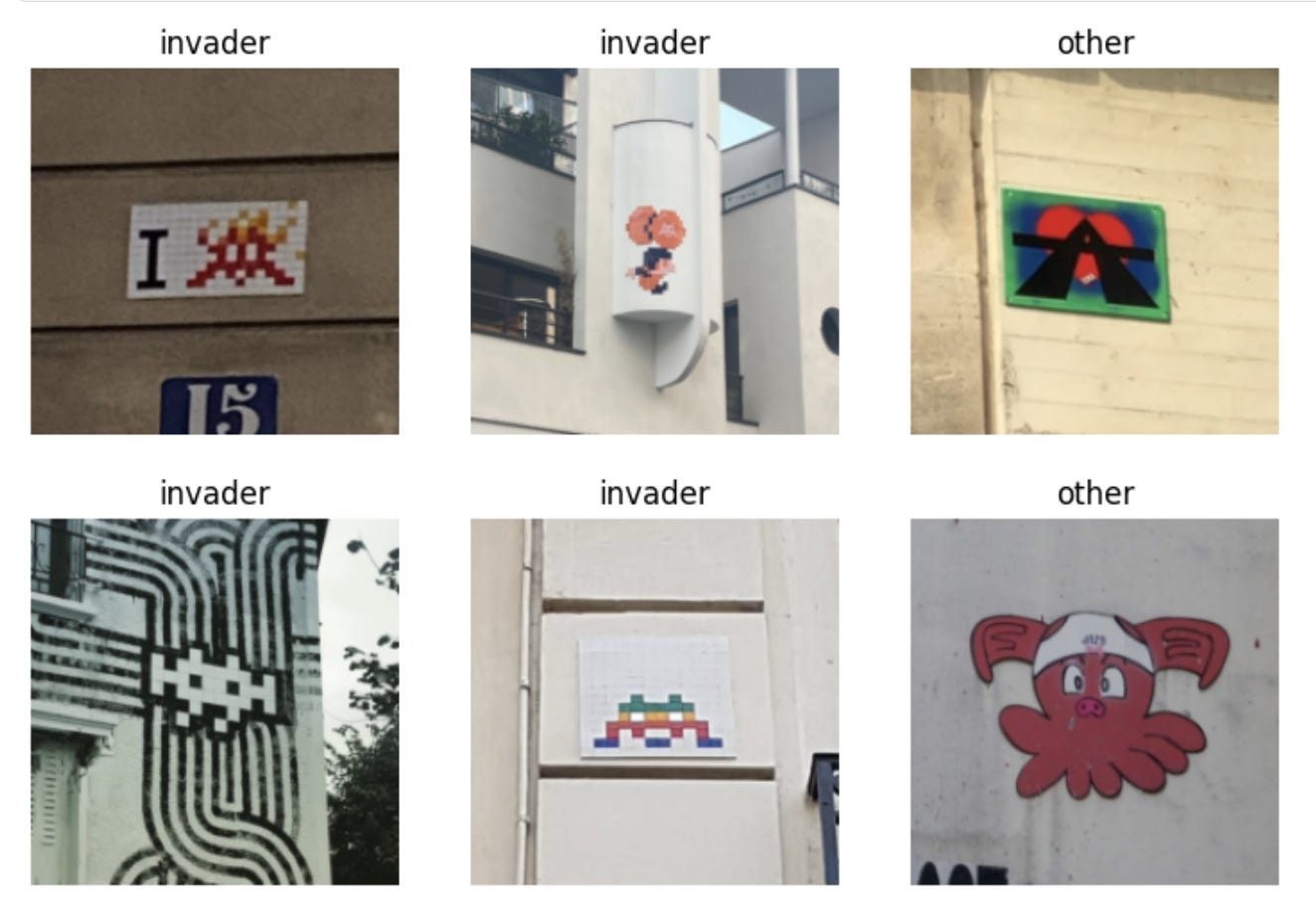

You can spend 10 hours on the first few lessons of PDL4C (a free and beautiful Deep Learning course) and see how it goes. If you already have some elementary background in programming, you’ll get to building end-to-end systems (like the Parisian street art classifier we saw a few months back) in just a few hours.

Getting to proficiency takes longer. PDL4C takes maybe 100-200 hours to study thoroughly and finish. But 10 hours is enough to get a taste and see if you want to continue.

Basic ideas are forever, everything else is tweaks

But AI moves too fast, I’ll never catch up!

Underneath the relentless media cycle that keeps heralding AI breakthroughs, the core ideas and techniques don’t actually change that much.

Jeremy Howard puts it this way:

Things are not changing that fast at all. It can look that way because people are always putting up press releases about their new tweaks.

Fundamentally the stuff that’s in the course now is not that different from what was in the course 5 years ago. And it’s not that different from the convolutional neural networks that Yann LeCun used on MNIST back in 1996.

The basic ideas are forever. Everything else is tweaks.

Status goals are self-defeating

OK, but I see amazing people being so good at AI, I will never catch up with them!

Well maybe, but what’s wrong with that?

Comparing ourselves to others is self-defeating: there’s always someone better at something, so we’re guaranteed to never be satisfied. More importantly, such comparisons distract us from the real challenge: studying the field, building cool projects and making friends along the way.

So go easy on arbitrary long-term goals of accomplishment and status: a little bit can keep you motivated, but fixating on them is self-sabotage.

In other news

🎵 It doesn’t matter where we are, together we can go far – Curtis Harding

🤖 Bard is now Gemini. Google’s Gemini model is now available in Europe, and the experimental chat interface that used to be called Bard is now simply called Gemini as well. Jack Krawczyk posted photos from the launch room.

🗼 Parisians vote to triple parking fees for SUVs. Paris is steadily becoming more friendly to pedestrians and bicycles, and increasingly hard and expensive to navigate in individual vehicles. As an avid bike-rider, I like it 👏.

Postcard from Paris

Quartier Martin Luther King looking dramatic 👀. Home to ducks, geese and a railway line that’s supposedly still live, but I never saw any trains on it.

Have a nice week 💫,

– Przemek