Watch it learn: a neural network training on Shakespeare

💫 We advance from the 60s to the early 2000s: a neural network reads all of Shakespeare. "Romeo be heard and this mighty ears twill"

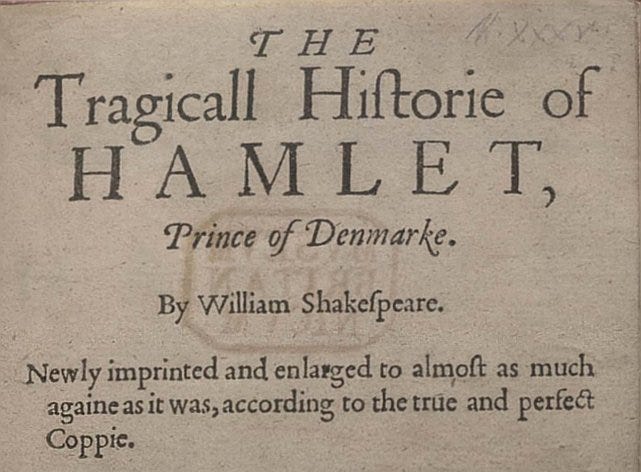

Last week we built a simple language model and trained it on the complete works of William Shakespeare. Our method wasn’t very effective: the model used only one word at a time to predict the next one, and even then it barely fit in the computer’s memory.

Today we will jump from the 60s to the early 2000s: we will use a neural network. The task stays the same: learn English from the complete works of Shakespeare and then generate some example phrases.

The architecture

The landmark paper on using neural networks for language modelling came in 2003.

The architecture proposed by a team of researchers at the University of Montreal consists of three layers: the input, the hidden layer and the output.

The input

The input to the network is the “context window”: that is, the few words that come before the word that we want to predict.

For example, if we’re training the model with a context length of 3, we show it samples of three words at a time, along with the word that followed them:

[‘to’, ‘be’, ‘or’] → ‘not’

[‘be’, ‘or’, ‘not’] → ‘to’

[‘or’, ‘not’, ‘to’] → ‘be’
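Building these samples is a simple sliding window over the text. Here is a minimal sketch in Python, assuming the text has already been split into words on whitespace (the actual source code may tokenize differently, for example by keeping punctuation as separate tokens); `make_samples` is a hypothetical helper name:

```python
def make_samples(words, context_length=3):
    """Return (context, next_word) pairs by sliding a window over the words."""
    samples = []
    for i in range(len(words) - context_length):
        context = words[i:i + context_length]    # the words the model gets to see
        target = words[i + context_length]       # the word it should predict
        samples.append((context, target))
    return samples

words = "to be or not to be".split()             # assumption: whitespace tokenization
for context, target in make_samples(words):
    print(context, "->", target)
```

This prints exactly the three samples listed above. Changing `context_length` is the only thing needed to train on longer windows.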

The hidden layer

The hidden layer is the part of the network that “learns” the language when the model is trained.

It consists of an arbitrary number of “digital neurons”. In the chart they are represented as yellow circles. But more important than the circles are the arrows: each arrow represents a multiplication. The data flows from top to bottom: numbers representing words are multiplied by the weights stored in the arrows.
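To make the arrows concrete, here is a minimal sketch of one forward pass in pure Python. It assumes a toy four-word vocabulary, two numbers per word and four hidden neurons, all chosen for readability, with random untrained weights. As in the 2003 paper, each word is first looked up in a table of learned numbers (an embedding) and the hidden neurons apply `tanh`; the paper's optional direct input-to-output connections are left out. The names `forward`, `embeddings`, `w_hidden` and `w_out` are illustrative, not taken from the source code.

```python
import math
import random

random.seed(0)

vocab = ["to", "be", "or", "not"]
word_to_index = {w: i for i, w in enumerate(vocab)}

EMBED_DIM = 2   # numbers per word (hypothetical, tiny for readability)
CONTEXT = 3     # words in the context window
HIDDEN = 4      # number of "digital neurons" (arbitrary)

def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

embeddings = rand_matrix(len(vocab), EMBED_DIM)     # one row of numbers per word
w_hidden = rand_matrix(HIDDEN, CONTEXT * EMBED_DIM)  # one weight per arrow into the hidden layer
b_hidden = [0.0] * HIDDEN
w_out = rand_matrix(len(vocab), HIDDEN)              # one weight per arrow into the output
b_out = [0.0] * len(vocab)

def forward(context_words):
    # 1. Turn each word into numbers and concatenate them into one input vector.
    x = [v for w in context_words for v in embeddings[word_to_index[w]]]
    # 2. Each hidden neuron multiplies every input by its arrow's weight, sums, squashes with tanh.
    hidden = [math.tanh(sum(wi * xi for wi, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    # 3. The output layer produces one raw score per vocabulary word.
    return [sum(wi * hi for wi, hi in zip(row, hidden)) + b
            for row, b in zip(w_out, b_out)]

print(forward(["or", "not", "to"]))
```

Every multiplication inside the two `sum(...)` expressions corresponds to one arrow in the chart; training only ever changes the numbers stored in `embeddings`, `w_hidden`, `w_out` and the biases.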

During training, the model predicts the next word for each context. Each prediction is compared to the word that actually followed, and the weights are adjusted to make the correct prediction more likely.
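The “adjust the weights” step can be sketched for a single layer. The snippet below assumes the hidden-layer activations for one context are already computed, and it updates only the output weights with plain gradient descent on the cross-entropy loss; a real training loop backpropagates the same error signal through the hidden layer and the word embeddings too. The learning rate, vocabulary size and activations are made-up values, and `train_step` is a hypothetical name.

```python
import math

def train_step(weights, hidden, target, lr=0.5):
    """One gradient-descent step on the output layer for a single example.

    weights: one row per vocabulary word; hidden: the hidden-layer activations;
    target: index of the word that actually came next. Returns the loss before the update.
    """
    scores = [sum(w * h for w, h in zip(row, hidden)) for row in weights]
    m = max(scores)                                  # softmax, shifted for numerical stability
    exps = [math.exp(s - m) for s in scores]
    probs = [e / sum(exps) for e in exps]
    loss = -math.log(probs[target])                  # cross-entropy: small when the right word is likely
    for i, row in enumerate(weights):
        # d(loss)/d(score_i) is probs_i - 1 for the target word and probs_i for every other word
        grad_score = probs[i] - (1.0 if i == target else 0.0)
        for j in range(len(row)):
            row[j] -= lr * grad_score * hidden[j]
    return loss

weights = [[0.0, 0.0, 0.0] for _ in range(4)]        # 4-word vocabulary, 3 hidden neurons
hidden = [0.5, -0.2, 0.8]                            # pretend activations for one context
losses = [train_step(weights, hidden, target=3) for _ in range(20)]
print(round(losses[0], 3), round(losses[-1], 3))
```

With all weights at zero the first prediction is uniform, so the first loss is ln 4; repeating the step pushes probability toward word 3 and the loss goes down.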

The output

The output of the model is a full probability distribution over all the possible words.

For example, given the input [‘or’, ‘not’, ‘to’], the model could come up with a prediction that looks like this:

(Here we’re showing only the 20 most likely words; the output is actually a probability distribution over all the words in the dictionary.)

This is looking pretty good: the model feels that “or not to be” is the most likely completion 🥳.
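Turning the network's raw scores into a probability distribution is usually done with a softmax, and the chart's “top 20” view is just a sort. A minimal sketch, where the five-word vocabulary and the scores are invented for illustration:

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    m = max(scores)                       # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(vocab, probs, k=20):
    """Return the k most likely (word, probability) pairs, most likely first."""
    return sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)[:k]

# Hypothetical scores for the context ['or', 'not', 'to'].
vocab = ["be", "the", "me", "a", "thee"]
scores = [3.0, 1.5, 1.0, 0.5, 0.2]
probs = softmax(scores)
for word, p in top_k(vocab, probs, k=3):
    print(f"{word:>5} {p:.3f}")
```

Softmax keeps the ranking of the scores, so the highest-scoring word is also the most probable one, but every word still gets a non-zero probability.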

Watch it learn

Watching the neural network progressively improve its grasp of language is an eerie experience.

Initially, after only a few cycles of training, the probability distributions for all input sequences are still basically uniform. The generated text looks like we’re throwing darts at the dictionary:

wiry hurts mourns met hizzing craftily playfellow be beaks conditions is applauding easiest tiddle dismissing pieces jenny
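Generating text works one word at a time: the model's distribution is sampled, the chosen word is appended, and the context window slides forward. Here is a minimal sketch, assuming a `predict(context)` function that returns one probability per vocabulary word. `untrained_predict` is a hypothetical stand-in for a freshly initialized network; a real one is only roughly uniform, while this stand-in is exactly uniform, which is why its output reads like darts thrown at the dictionary.

```python
import random

def generate(predict, vocab, start, length, context_length=3, rng=random):
    """Autoregressive generation: sample a word, append it, slide the window, repeat.

    predict(context) must return one probability per word in vocab.
    """
    words = list(start)
    for _ in range(length):
        context = words[-context_length:]            # only the last few words are used
        probs = predict(context)
        words.append(rng.choices(vocab, weights=probs, k=1)[0])
    return words

vocab = ["wiry", "hurts", "mourns", "met", "craftily", "playfellow", "be", "beaks"]

def untrained_predict(context):
    # Stand-in for an untrained network: every word equally likely, whatever the context.
    return [1.0 / len(vocab)] * len(vocab)

rng = random.Random(42)
print(" ".join(generate(untrained_predict, vocab, start=["to", "be", "or"], length=8, rng=rng)))
```

Swapping `untrained_predict` for a trained network's forward pass plus softmax is what turns this noise into the increasingly English-like samples below.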

After 250 cycles, some structure and rhythm start to emerge:

report artemidorus forc ipswich gaze semblative

if unripp weeding resides bouts chamberers , and

show , as ! a breech will do

in my thee the concur

And after 5000 cycles we start to stumble upon nuggets that look like reasonable English phrases:

romeo be heard and this mighty ears twill

bawd , emperial ! ; steal it

cry heavens dost successful i say

children scattering , sir

Compared to ChatGPT or Gemini this isn’t impressive… until we realize that this network was trained on 5 MiB of data (it would fit on a few floppy disks!) and that training takes one minute on a laptop.

Conclusion

A neural network seems to be a better approach than the bigram counting that we tried last week: it can take more than one word at a time as context, and it fits in the computer’s memory.

More importantly, this approach is scalable. We can increase the context window while leaving almost all of the code unchanged. We can also improve the architecture: indeed, the transformer architecture used by modern LLMs adds a few techniques that we will look at in future editions 💫.
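Back-of-the-envelope arithmetic shows why the network scales better than counting. The sketch below compares a dense n-gram table, which needs one entry for every combination of context and next word, with the parameter count of a Bengio-style network (without the paper's optional direct connections). The vocabulary size of 30,000, the 64 numbers per word and the 256 hidden neurons are assumptions for illustration, not the settings used in the source code.

```python
def ngram_table_entries(vocab_size, context_length):
    """Entries in a dense counting table: one per (context, next word) combination."""
    return vocab_size ** (context_length + 1)

def mlp_parameters(vocab_size, context_length, embed_dim, hidden):
    """Parameters of a Bengio-style network with one hidden layer."""
    embeddings = vocab_size * embed_dim                          # one row of numbers per word
    hidden_layer = context_length * embed_dim * hidden + hidden  # weights + biases
    output_layer = hidden * vocab_size + vocab_size              # weights + biases
    return embeddings + hidden_layer + output_layer

V = 30_000   # assumed vocabulary size, roughly Shakespeare-sized
for n in (1, 3, 10):
    print(f"context {n:>2}: n-gram table {ngram_table_entries(V, n):.1e} entries, "
          f"network {mlp_parameters(V, n, embed_dim=64, hidden=256):,} parameters")
```

Under these assumptions the bigram case (context of 1) already needs 900 million table entries, while the network has about 9.7 million parameters with a context of 3 (roughly 39 MB as 32-bit floats). Going from 3 to 10 words of context adds only 114,688 parameters, because only the hidden layer's input grows; the dense table grows by a factor of 30,000 to the power of 7.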

More on this

⚙️ source code

📝 the paper by Yoshua Bengio et al. [2003]

🎞️ video lecture by Andrej Karpathy: he describes the same approach, but at the character level rather than word by word.
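The main difference between a character-level and a word-level model is what counts as a token, and therefore how large the vocabulary is. A quick illustration on the familiar phrase, assuming simple whitespace splitting for the word-level version:

```python
text = "to be or not to be"

word_tokens = text.split()   # word level: the model predicts whole words
char_tokens = list(text)     # character level: the model predicts one character (spaces included)

print(word_tokens)
print(char_tokens[:6])
print(len(set(word_tokens)), len(set(char_tokens)))   # vocabulary sizes
```

Characters give a tiny vocabulary but much longer sequences to predict; words give short sequences but a vocabulary in the tens of thousands.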

In other news

📖 book recommendation from Ben Evans: This time is different. That’s what everyone says at the top of a bubble. The rules have changed, gravity doesn’t apply, and new multiples and new expectations apply, until they don't.

📰 Gary Marcus on the “great AI retrenchment”: It was always going to happen; the ludicrously high expectations from the last 18 months were never going to be met. LLMs are not AGI, and (on their own) never will be; scaling alone was never going to be enough.

👾 I upgraded my map of space invaders to automatically cluster markers at higher zoom levels. This makes the map scroll smoothly even with many markers in the field of view (hello Paris with its 1500 mosaics :)).

Postcard from Paris

Parisian evenings in June are very long and can be very charming. This year they are also exceptionally rainy, so every nice one feels special.

Here’s to nice evenings 💫,

– Przemek
