Speak to me in Shakespeare: building a tiny language model

🖋️ Distilling the complete works of Shakespeare into a 2GiB table of word pairs; "Elsinore, I swear he heard the senate", said the language model.

Language models are fascinating. How do computers, the glorified calculators that they are, learn the structure and the meaning of human language?

Let’s start looking at this question today! To begin, we’ll build a small language model that can produce text resembling Shakespearean prose.

Goal

We want to build a small language model. We will make it as simple as possible, but it will be a real machine-learned model: trained from scratch on the complete works of Shakespeare.

To keep things simple, our initial model will not use neural networks. Instead, we will use a simpler technique based on something we all learned in elementary school: counting.

Counting pairs

First, we will download a text file with the complete works of Shakespeare. Then, we will go through the entire file and look at all pairs of consecutive words. For every such pair of words (w1, w2), we will take note that w2 appeared after w1.

For example, training on Shakespeare’s Sonnet 1, we’d first note that “from” was followed by “fairest”, then that “fairest” was followed by “creatures”, and so on.

This is pretty easy to implement: we just need a big table of numbers with one row and one column for every distinct word in our language (in this case, Shakespearean English). Each cell counts how many times the column word followed the row word.

If we were only training on a snippet of Sonnet 1, the table used for counting could look something like this. We can see that “from” was followed by “fairest” once, and then “fairest” was followed by “creatures” once.
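A minimal Python sketch of this counting on the same snippet. Instead of a full table, it stores only the pairs that actually occur, in a `collections.Counter` keyed by (w1, w2); a dense table would hold the same non-zero counts plus zeros everywhere else.

```python
from collections import Counter

# Opening words of Sonnet 1, already lowercased and split into words.
snippet = "from fairest creatures we desire increase".split()

# Count every pair of consecutive words (w1, w2).
pair_counts = Counter(zip(snippet, snippet[1:]))

for (w1, w2), n in pair_counts.items():
    print(f"{w1} -> {w2}: {n}")  # e.g. "from -> fairest: 1"
```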

The complete works of Shakespeare

We can use the complete works of Shakespeare compiled into a single text file by Project Gutenberg.

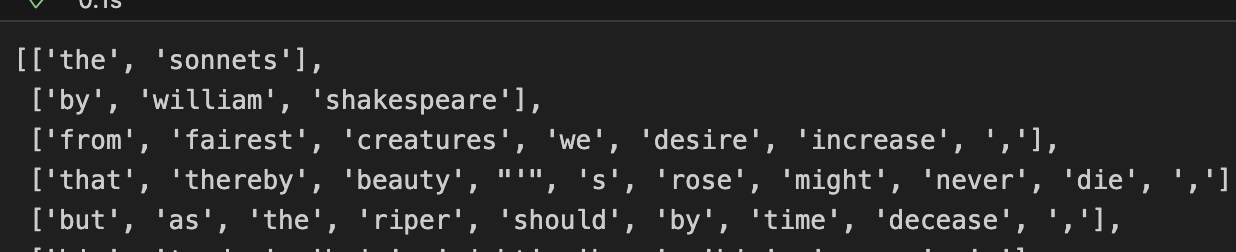

The first thing to do is to tokenize the input. That is, we need to break up sentences into individual “tokens”: in our model, tokens will be individual words and punctuation marks.

While at it, we will also convert all words to lowercase. The pre-processed input looks something like this:
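The post does not show the exact tokenizer. One plausible sketch uses a regular expression (an assumption, as is the helper name `tokenize`) that lowercases the text and keeps words and individual punctuation marks as separate tokens:

```python
import re

def tokenize(sentence):
    """Lowercase a sentence and split it into word and punctuation tokens."""
    # \w+(?:'\w+)* keeps words with inner apostrophes (e.g. "o'er") together;
    # [^\w\s] matches a single punctuation mark as its own token.
    return re.findall(r"\w+(?:'\w+)*|[^\w\s]", sentence.lower())

print(tokenize("From fairest creatures we desire increase,"))
# ['from', 'fairest', 'creatures', 'we', 'desire', 'increase', ',']
```

Dashes and other punctuation become tokens of their own, which is consistent with the spaced-out punctuation in the generated samples in the Results section.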

Let’s also add a special sentinel token at the beginning and at the end of every sentence:
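A sketch of this step, with `add_sentinels` as a hypothetical helper name; the token spellings `<S>` and `<E>` are the ones the Inference section uses:

```python
START, END = "<S>", "<E>"

def add_sentinels(tokens):
    """Wrap one tokenized sentence in start and end sentinel tokens."""
    return [START] + tokens + [END]

print(add_sentinels(["from", "fairest", "creatures"]))
# ['<S>', 'from', 'fairest', 'creatures', '<E>']
```

Because every sentence now begins with `<S>`, the `<S>` row of the table counts which words open a sentence, and the `<E>` column counts which words close one.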

Before we start counting, there’s just one more caveat to worry about: will the table of counts fit in the computer’s memory?

> words = set(word for sentence in lines for word in sentence)

> len(words), len(words)**2

23659, 559748281

We have 23 659 different words in the complete works of Shakespeare. Our table will have 23 659 rows and 23 659 columns, so we need to store (23 659)^2 = 559 748 281 numbers. Assuming 4 bytes per number, that is 2.09 GiB. A lot, but it should fit in memory on most laptops 💫.

The counting runs in under 10s on the CPU:
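The original notebook’s counting code is not reproduced here. As a minimal sketch, assuming NumPy and sentences that are already tokenized and wrapped in sentinels, counting into a dense table could look like this (`count_pairs` is a hypothetical name, and this version has not been timed against the 10 s figure):

```python
import numpy as np

def count_pairs(sentences):
    """Count consecutive token pairs into a dense V x V table.

    `sentences` is a list of token lists, each already wrapped in <S> ... <E>.
    Returns (vocab, index, counts), where counts[i, j] is the number of
    times vocab[j] directly followed vocab[i].
    """
    vocab = sorted({tok for sent in sentences for tok in sent})
    index = {tok: i for i, tok in enumerate(vocab)}
    # int32: 4 bytes per cell, matching the memory estimate above.
    counts = np.zeros((len(vocab), len(vocab)), dtype=np.int32)
    for sent in sentences:
        ids = np.array([index[tok] for tok in sent])
        # np.add.at is unbuffered: if the same (row, col) pair occurs twice in
        # one sentence, both occurrences are counted.
        np.add.at(counts, (ids[:-1], ids[1:]), 1)
    return vocab, index, counts

# Two toy sentences (words from Sonnet 1), not the full corpus.
sentences = [
    ["<S>", "from", "fairest", "creatures", "we", "desire", "increase", "<E>"],
    ["<S>", "thy", "self", "thy", "foe", "<E>"],
]
vocab, index, counts = count_pairs(sentences)
print(counts[index["thy"], index["self"]])  # 1
```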

Inference

Now that we have the counts, our model is “trained”. (In fact, the big table of word pair counts is the model.) But how do we use it to generate text?

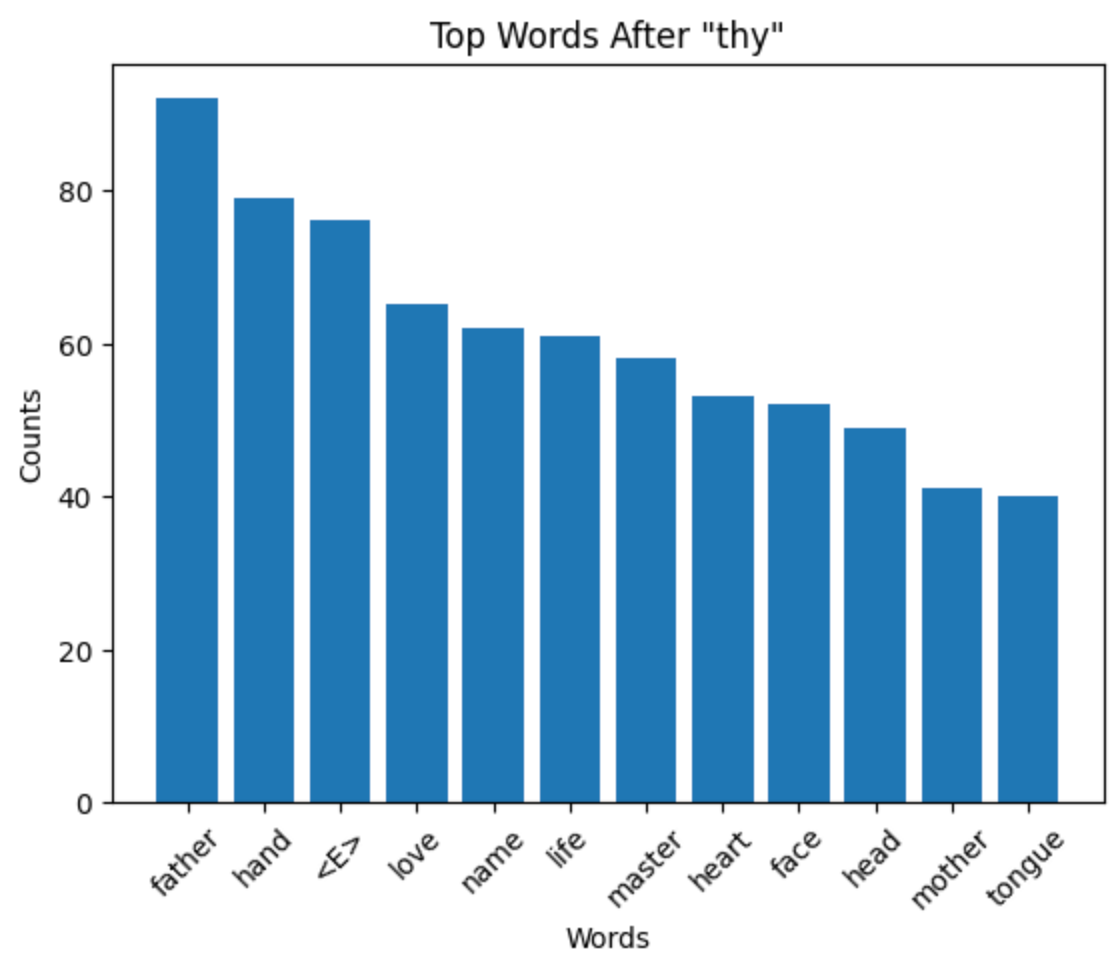

For any current word w, we can look at the corresponding row of the table and retrieve the counts of all the words that follow w in the training data set. For example, here are the words that most commonly come after “thy”:
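Looking up the followers of a word is a single row lookup followed by a sort. The sketch below uses a tiny made-up table (the numbers are illustrative, not Shakespeare’s real counts) and a hypothetical `top_followers` helper:

```python
import numpy as np

# Toy table: the counts are made up for illustration.
vocab = ["thy", "self", "sweet", "beauty"]
index = {w: i for i, w in enumerate(vocab)}
counts = np.array([
    [0, 3, 1, 2],  # row "thy": self x3, sweet x1, beauty x2
    [1, 0, 0, 0],  # row "self"
    [0, 1, 0, 0],  # row "sweet"
    [0, 0, 0, 0],  # row "beauty": never followed by anything here
], dtype=np.int32)

def top_followers(counts, vocab, index, word, k=3):
    """Return up to k (follower, count) pairs for `word`, most frequent first."""
    row = counts[index[word]]
    order = np.argsort(row)[::-1][:k]  # column ids, highest count first
    return [(vocab[j], int(row[j])) for j in order if row[j] > 0]

print(top_followers(counts, vocab, index, "thy"))
# [('self', 3), ('beauty', 2), ('sweet', 1)]
```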

The key insight is that we can interpret those counts as a probability distribution, and then use it to randomly generate the next token. (Here, “randomly” means sampling according to that distribution: a word that followed w twice as often in the training data is twice as likely to be picked.)
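In NumPy, turning a row of counts into a distribution and drawing from it takes two lines. This sketch (toy counts, hypothetical `sample_next` helper) draws many samples to show that the observed frequencies track the normalized counts:

```python
import numpy as np

def sample_next(row, rng):
    """Draw a column index with probability proportional to its count."""
    probs = row / row.sum()  # counts -> probability distribution
    return rng.choice(len(row), p=probs)

rng = np.random.default_rng(seed=0)
row = np.array([0, 3, 1, 2])  # toy counts: probabilities 0, 1/2, 1/6, 1/3
draws = [sample_next(row, rng) for _ in range(6000)]
freqs = np.bincount(draws, minlength=len(row)) / len(draws)
print(freqs)  # close to [0, 0.5, 0.167, 0.333]
```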

With this in mind, we can simply start at the sentinel token ‘<S>’ and then keep generating the next token until we land on ‘<E>’.
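Putting it together, here is a minimal generation loop under the same assumptions (NumPy, a dense count table built from sentinel-wrapped sentences, here just two toy sentences). The `max_len` cap is an added safeguard, not something the post describes:

```python
import numpy as np

def generate(counts, vocab, index, rng, max_len=50):
    """Sample tokens starting after <S> until <E> is drawn (or max_len is hit)."""
    current = index["<S>"]
    words = []
    for _ in range(max_len):
        row = counts[current]
        current = rng.choice(len(vocab), p=row / row.sum())
        if vocab[current] == "<E>":
            break
        words.append(vocab[current])
    return " ".join(words)

# Toy training data: two sentinel-wrapped sentences.
sentences = [
    ["<S>", "from", "fairest", "creatures", "<E>"],
    ["<S>", "from", "thy", "self", "<E>"],
]
vocab = sorted({t for s in sentences for t in s})
index = {t: i for i, t in enumerate(vocab)}
counts = np.zeros((len(vocab), len(vocab)), dtype=np.int32)
for s in sentences:
    ids = np.array([index[t] for t in s])
    np.add.at(counts, (ids[:-1], ids[1:]), 1)

rng = np.random.default_rng()
print(generate(counts, vocab, index, rng))  # "from fairest creatures" or "from thy self"
```

Every token other than `<E>` is followed by something in the training data (each sentence ends with `<E>`), so the row being normalized is never all zeros.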

Results

What does it produce? Mostly Shakespearean gibberish, of course:

caesar , my father and there were in weightier judgment by this repays

send the high gods have store - i told us .

But some of what it produces looks eerily legit:

elsinore . i swear he heard the senate

ugly form upon our means and true nature

so sad and pays for a deed of envy

How to improve these results? The first thing to try would be to increase the context that the model uses to pick the next word. But we’re hitting an architectural limitation here: we cannot simply move from counting pairs to counting triplets, because the resulting table wouldn’t fit in computer memory.
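To see why, here is the back-of-the-envelope estimate from above extended to triplets, still assuming a dense table with 4 bytes per count:

```python
V = 23_659           # distinct words in the complete works
BYTES_PER_COUNT = 4  # e.g. one 32-bit integer per cell

pairs_gib = V**2 * BYTES_PER_COUNT / 2**30     # one cell per (w1, w2)
triplets_tib = V**3 * BYTES_PER_COUNT / 2**40  # one cell per (w1, w2, w3)
print(f"pairs:    {pairs_gib:.2f} GiB")
print(f"triplets: {triplets_tib:.1f} TiB")
```

The triplet table is V times larger than the pair table: about 48 TiB instead of 2.09 GiB, far beyond the memory of any laptop.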

(What can we do about it? The answer in a future edition 💫)

More on this

⚙️ Source code on Kaggle.

🎞️ A similar model (but character-level) explained in Andrej Karpathy’s video

In other news

💰 Elon Musk’s xAI raised $6bn to build their own LLM. How many of those large, foundational, expensive-to-train models can be financially sustained in the long run is one of the open questions of this genAI boom.

💡 Ben Evans’ newsletter: There is definitely a growing split between the view deep inside AI companies and Silicon Valley that generative AI is Everything, and a view forming outside that this stuff is certainly very important, but isn’t necessarily very useful, yet, (…). Roughly half of people who've tried ChatGPT never used it again.

👀 Apple WWDC is coming up on Monday, and everyone’s eyes are trained on the expected generative AI announcements.

Postcard from Clermont-Ferrand

Paragliders taking off from Puy de Dôme, the extinct volcano towering over Clermont-Ferrand.

Have a great week 💫,

– Przemek
