Magical, but not magic: peeking inside a neural network

💡 Amy, Bob and Clara are neurons; and each of them has a different opinion about the Titanic disaster

Neural networks are the wizardry of the modern age 💫. They can do magical things (ChatGPT, Midjourney, Google Translate, …) and no-one seems quite sure about how they work.

I mean, we sort of understand how they work: they are made of “digital neurons” and they are trained on large amounts of data. But how do they really work? What sound do they make when you gently squeeze them?

The explanations I could find on the Internet are either very vague (“it’s just like neurons in a brain, but digital”) or waaay more complex than necessary: if you Google “The simplest neural network”, the first result talks about sigmoids 🙈.

So for today I put together what may be the world’s simplest, distilled-to-the-basics, explainable neural network. It features three characters: Amy, Bob and Clara. They are digital neurons, and each of them has a different opinion about what happened on the Titanic 💫.

Titanic passengers

We’re going to make a neural network that predicts the survival chances of Titanic passengers. (A topic we saw before.)

To keep the example really simple, we’re going to look at just two pieces of information about each passenger: their age and sex. Each will be a numeric value:

age: a number between 0.0 and 1.0, where 1.0 represents the oldest age found among the Titanic passengers. Everyone’s age has been proportionally scaled to fit this range.

sex: 0.0 for male and 1.0 for female. (Gender is not binary but the relevant data in the Titanic data set is.)
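
As a rough sketch of this encoding step (the four passengers below are made up for illustration, and the `encode` helper is a hypothetical name, not something from the actual data pipeline):

```python
# Hypothetical sample passengers as (age in years, sex) pairs; not real Titanic rows.
raw_passengers = [(22.0, "male"), (38.0, "female"), (4.0, "female"), (80.0, "male")]

def encode(passengers):
    """Scale ages so the oldest passenger maps to 1.0 and encode sex as 0.0 / 1.0."""
    oldest = max(age for age, _ in passengers)
    return [(age / oldest, 1.0 if sex == "female" else 0.0) for age, sex in passengers]

encoded = encode(raw_passengers)
for row in encoded:
    print(row)
```

The oldest passenger in the sample ends up with age 1.0, and everyone else gets a proportionally smaller number.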

The panel of experts

The neural network will have three neurons: Amy, Bob and Clara.

You can think of them as a panel of experts we invite to predict survival outcomes of each passenger. For each expert, we’re asking them to provide a number close to 1.0 for passengers that are likely to survive, and 0.0 for passengers that are likely to perish.

Amy thinks that women are more likely to survive, regardless of their age. So she makes her predictions using the formula:

Amy’s prediction: 0*age + 1*sex + 0

This boils down to predicting 1.0 (survival) if the passenger is a woman, and 0.0 (demise) otherwise.

Bob is a hopeless optimist: he predicts that everyone survives, regardless of the data. Just like the experts we see in the media, our experts are not necessarily very good :).

Bob’s prediction: 0*age + 0*sex + 1

Clara thinks that children and women are more likely to survive and she uses both pieces of data in her predictions, giving them equal weight:

Clara’s prediction: -0.5*age + 0.5*sex + 0.5
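
If it helps to see the three experts as code, here is a minimal sketch. The formulas are the ones above; the sample passenger (a man with a scaled age of 0.3) is made up for illustration.

```python
def amy(age, sex):
    # Only looks at sex; age gets a weight of 0.
    return 0 * age + 1 * sex

def bob(age, sex):
    # Ignores both inputs and always answers 1 (survival).
    return 0 * age + 0 * sex + 1

def clara(age, sex):
    # Younger passengers and women score higher.
    return -0.5 * age + 0.5 * sex + 0.5

# Hypothetical passenger: a man with a scaled age of 0.3.
age, sex = 0.3, 0.0
print("Amy:", amy(age, sex))
print("Bob:", bob(age, sex))
print("Clara:", round(clara(age, sex), 2))
```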

Prediction by committee

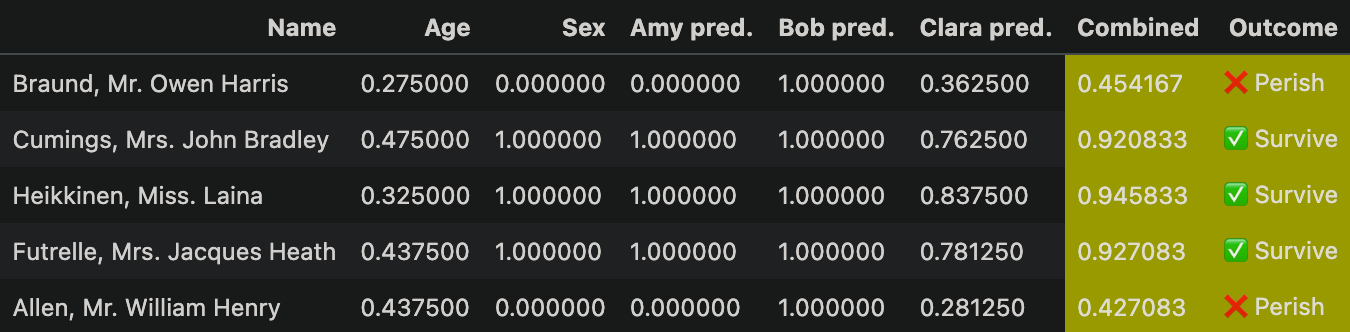

For the first passenger, Amy thinks he will perish, while Bob predicts survival. Now we’re in a classic real-life situation: we have multiple experts and they don’t agree 🤷. We can come up with an estimation of how much we trust each expert, and combine their opinions into a weighted average:

combined prediction = 1/3*amy + 1/3*bob + 1/3*clara

Values >= 0.5 indicate a prediction of survival, less than that indicates a prediction of demise. We note the resulting prediction in the “Outcome” column:
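
Here is a small sketch of the committee vote, using the formulas and the 0.5 cut-off from the text. The function names and the two sample passengers are hypothetical, chosen only for illustration.

```python
def combined_prediction(age, sex):
    # The three experts' formulas from the text.
    amy = 0 * age + 1 * sex
    bob = 0 * age + 0 * sex + 1
    clara = -0.5 * age + 0.5 * sex + 0.5
    # We trust each expert equally, so each gets a weight of 1/3.
    return 1 / 3 * amy + 1 / 3 * bob + 1 / 3 * clara

def outcome(age, sex):
    # Values >= 0.5 count as a prediction of survival.
    return "survives" if combined_prediction(age, sex) >= 0.5 else "perishes"

# Two made-up passengers with the same scaled age of 0.3.
print("man:", round(combined_prediction(0.3, 0.0), 2), outcome(0.3, 0.0))
print("woman:", round(combined_prediction(0.3, 1.0), 2), outcome(0.3, 1.0))
```

Changing the three 1/3 weights is how we would express trusting one expert more than the others.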

Neural network

What we just made is, in fact, a neural network. Amy, Bob and Clara are three digital neurons. They’re connected to the input data, apply a transformation on it and produce a value. At the end we combine their outputs into a single value and use it to make predictions:

Yes, this example is very simple and silly 🤡. But now that we see how it works, we can better explain the more interesting aspects of neural networks.

How to train a dragon

In our example, we completely made up the formulas that Amy, Bob and Clara use to make predictions, and also the final formula that combines their predictions.

Here’s the first fun fact about neural networks: it actually works like this in real life 💫. Training the neural network starts with random values assigned to each parameter (like the numbers “0” and “1” in Amy’s formula). Then, the network is “trained” on data to find better values.

What does it mean to “train” the neural network? Unlike the experts in the media, Amy, Bob and Clara are happy to change their opinions :). To train the neural network, we calculate the outcome for data where we already know the right answer, and then tweak the parameters (i.e. the numbers in the formulas that Amy, Bob and Clara use) to better match the expected outcome.
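
To make “tweak the parameters” concrete, here is a deliberately naive sketch. It is an assumption-heavy toy, not how real networks are trained: it nudges the parameters at random and keeps a nudge only when the error goes down (hill climbing), whereas real training uses gradient descent. The six passengers are made up, and `predict`, `error` and `train` are hypothetical names.

```python
import random

# Hypothetical training data: (scaled age, sex, survived). Not real Titanic rows.
DATA = [
    (0.30, 0.0, 0.0),
    (0.50, 1.0, 1.0),
    (0.05, 1.0, 1.0),
    (0.70, 0.0, 0.0),
    (0.40, 1.0, 1.0),
    (0.60, 0.0, 0.0),
]

def predict(params, age, sex):
    """params holds 12 numbers: 3 per neuron, then 3 weights for combining them."""
    outputs = [params[i] * age + params[i + 1] * sex + params[i + 2] for i in (0, 3, 6)]
    return sum(weight * out for weight, out in zip(params[9:], outputs))

def error(params):
    """Mean squared difference between predictions and the known outcomes."""
    return sum((predict(params, a, s) - y) ** 2 for a, s, y in DATA) / len(DATA)

def train(steps=2000, seed=0):
    rng = random.Random(seed)
    params = [rng.uniform(-1, 1) for _ in range(12)]  # start from random values
    start_error = best = error(params)
    for _ in range(steps):
        candidate = [p + rng.gauss(0, 0.1) for p in params]  # small random tweak
        candidate_error = error(candidate)
        if candidate_error < best:  # keep the tweak only if it helps
            params, best = candidate, candidate_error
    return params, start_error, best

params, start_error, final_error = train()
print(f"error before: {start_error:.3f}, after: {final_error:.3f}")
```

The loop never looks inside the formulas. It only checks whether the predictions got closer to the known answers, which is the whole idea of training in miniature.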

When the training goes well, the quality of the predictions improves with time:

In this extremely simple example, I trained Amy, Bob and Clara on data for 700 passengers from the Titanic data set. The neural network quickly learns to just predict “Doesn’t survive” for almost all passengers, which allows it to reach an accuracy of about 60% (most passengers indeed didn’t survive).
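
To see why “always predict the majority outcome” scores this well, here is a tiny sketch of how accuracy is computed. The ten labels are made up so that 6 out of 10 passengers don’t survive, roughly mirroring the proportion mentioned above; they are not real Titanic rows.

```python
# Hypothetical labels: 1 = survived, 0 = did not survive.
actual = [0, 0, 1, 0, 1, 0, 0, 1, 1, 0]

def accuracy(predicted, actual):
    """Fraction of passengers for which the prediction matches the real outcome."""
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return correct / len(actual)

# A "network" that predicts "Doesn't survive" for everyone.
always_perish = [0] * len(actual)
print(accuracy(always_perish, actual))  # prints 0.6
```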

From three neurons to ChatGPT

How do you get from a tiny network like this to something that can power ChatGPT?

For one thing, you’re gonna need a bigger network. The size of a neural network is measured in the number of parameters. Remember the formulas each neuron used to predict survival outcomes?

Clara’s prediction: -0.5*age + 0.5*sex + 0.5

Each of those numeric values (-0.5, 0.5 and 0.5) is a parameter. In total, our example network has 12 of them: 3 for each neuron and then 3 for the final output formula.

For comparison, a “small” 2023 state-of-the-art open source model by Mistral AI has 7 billion parameters (583 million times more :)).
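
Both numbers are easy to double-check. In the sketch below the whole network is written down as plain lists of numbers; the dictionary layout is just an illustration, and Amy’s constant term is taken to be 0.

```python
# The entire example network, written as its parameters.
network = {
    "amy": [0.0, 1.0, 0.0],           # age weight, sex weight, constant (assumed 0)
    "bob": [0.0, 0.0, 1.0],
    "clara": [-0.5, 0.5, 0.5],
    "output": [1 / 3, 1 / 3, 1 / 3],  # how much we trust each expert
}

parameter_count = sum(len(values) for values in network.values())
print(parameter_count)  # prints 12

# How many times bigger is a 7-billion-parameter model, in millions?
print(round(7_000_000_000 / parameter_count / 1e6))  # prints 583
```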

The structure of the network matters too. The famous “transformers” architecture that powers LLMs like the one behind ChatGPT looks like this when visualized. The matrices represent data flowing through the artificial neurons.

The final difference is a bit of fancy math we skipped here. It makes it possible for the neural network to model the complexities of the problem we’re solving (activation functions) and for us to find the right way to tweak the parameters (gradient descent). But the main point of this post is this: you don’t need to understand any of this to get the basic mechanism of what neural networks are.

It really is just Amy, Bob and Clara and us fiddling with the parameters of their formulas to best predict Titanic survival outcomes 💫.
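
For the curious, here is a minimal sketch of the two bits of math we skipped, applied to a single neuron. Everything specific in it is an assumption made for illustration: the sigmoid as the activation function, cross-entropy as the measure of error, the learning rate, and the four made-up passengers. Feel free to skip it.

```python
import math

# Hypothetical data: (scaled age, sex, survived). Not real Titanic rows.
DATA = [(0.30, 0.0, 0.0), (0.50, 1.0, 1.0), (0.05, 1.0, 1.0), (0.70, 0.0, 0.0)]

def sigmoid(x):
    """Activation function: squashes any number into the range 0..1."""
    return 1 / (1 + math.exp(-x))

def neuron(params, age, sex):
    w_age, w_sex, bias = params
    return sigmoid(w_age * age + w_sex * sex + bias)

def loss(params):
    """Average cross-entropy between predictions and the known outcomes."""
    total = 0.0
    for age, sex, y in DATA:
        p = neuron(params, age, sex)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(DATA)

def gradient_descent(steps=500, learning_rate=0.5):
    params = [0.0, 0.0, 0.0]
    for _ in range(steps):
        grads = [0.0, 0.0, 0.0]
        for age, sex, y in DATA:
            # For sigmoid + cross-entropy, the slope is simply (prediction - answer).
            diff = neuron(params, age, sex) - y
            for i, x in enumerate((age, sex, 1.0)):
                grads[i] += diff * x / len(DATA)
        # Move each parameter a small step in the direction that lowers the loss.
        params = [p - learning_rate * g for p, g in zip(params, grads)]
    return params

trained = gradient_descent()
print(f"loss before: {loss([0.0, 0.0, 0.0]):.3f}, after: {loss(trained):.3f}")
```

Instead of guessing which way to tweak each parameter, gradient descent computes the direction that reduces the error and takes a small step that way.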

In other news

🎞️ A 5-minute video showing a bit more realistic application of a bit bigger network (with more layers than the 1 layer of “neuron experts” in our example).

🤖 Google DeepMind announced Gemini, a family of multimodal models with state-of-the-art performance. The stunning demo video generated some follow-up discussions. Gary Marcus comments: “Friends don’t let friends take demos seriously”.

🎲 Meta released Cicero, an AI for playing the board game Diplomacy. I miss playing board games.

Postcard from Paris

Days are short and rainy in Paris. The conditions for reading and writing and staring into the void remain perfect. I think I’ll start a poi spinning group at work to boost the mood 🤹.

Have a great week 💫,

– Przemek