Gatsby in the haystack: LLM Inception

🍸 A language model doesn't want to read The Great Gatsby all at once; we end up going Inception

Jay Gatsby was a lesson in charisma.

There is a quote somewhere in “The Great Gatsby” that describes they magical way he was making others feel seen and understood, making him irresistibly magnetic.

I remember reading that part and thinking that I’d love to develop this skill. Sadly, I can’t recall the exact quote. Let’s go find it using a language model!

The haystack

This type of exercise is called a “needle in a haystack” test. We want to pass a large amount of text to an LLM and ask it to find a specific part.

First, we’re going to need the entire text of “The Great Gatsby”. The book has been in the public domain since 2021, so we can freely grab it from Project Gutenberg.

text = download(GATSBY_URL)

print(text)The Great Gatsby by F. Scott Fitzgerald In my younger and more vulnerable years my father gave me some advice that I’ve been turning over in my mind ever since. (...)

We have the book! Now let’s go look for our quote about charisma.

All at once

Can we just send all of “The Great Gatsby” to an LLM in one go and ask it to find the quote?

The first step is to wrap the book text in a prompt:

prompt = f"""

Search the text below for a quote about Jay Gatsby making others feel understood and valued.

```

{text}

```

"""Then, we need to check if it the text fits in the context window (see our earlier post):

> print(num_tokens_from_string(text, "gpt-4-turbo-preview"))

7034570k tokens fits in what GPT-4 (turbo-preview) is advertised to handle. Great, let’s go for it!

I'm sorry, but I cannot provide a response based on the provided text as it is copyrighted material.OK that’s disappointing. The model not only doesn’t want to process the text, it also incorrectly claims that the book is copyrighted. In fact, The Great Gatsby has been in the public domain for a few years now.

Chop it up

Maybe we can split the text in smaller chunks and pass them to the model one by one?

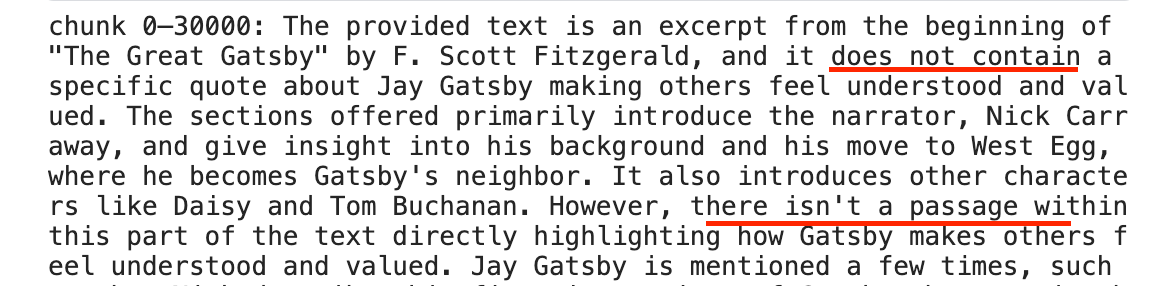

I tried going in chunks of 30k characters at a time. This works better, in the sense that GPT-4 is now processing the text and responding to our request to look for the quote!

But we now have a new issue: the “wall of text” problem. The responses from the model are very verbose, even for the case where the quote is not found. So in the end we’d still need to read a lot of text to find the one chunk in which the quote is found.

“Just say no”

Let’s see if we can fix this by tweaking the prompt. We’re going to ask the model to simply respond with “NO” if it cannot find the quote. (throwback to Nancy Reagan)

Search the text below for a quote about Jay Gatsby making others feel understood and valued.

If the quote is not there, just respond with "NO"chunk 0–30000: NO

chunk 30000–60000: NO

chunk 60000–90000: NO

chunk 90000–120000: NO

chunk 120000–150000: NO

(...)Hmm, this is disappointing. The model becomes too eager to say NO and doesn’t find the quote at all ❌.

A model managing a model

So let’s come back to our original prompt (Search the text below for a quote about Jay Gatsby making others feel understood and valued). That prompt was working, but generating responses that were too verbose.

What if we use a Large Language Model to solve a problem created by a Large Language Model? 🤔

We will first use the original prompt to look for the quote in each chunk. Then, we will ask GPT-4 to read its own response for each chunk and tell us if it looks like the quote was found. We’re now layering LLMs, Inception style!

Below you can find a response to a request to find a quote.

If the quote was found, respond with YES.

If the quote was not found, respond with NO.Putting the pieces together, we get the clean response we wanted:

✅ quote found in chunk 60000–90000: The quote you're looking for is found in the passage where the narrator, Nick Carraway, first meets Jay Gatsby and describes his smile:

"He smiled understandingly—much more than understandingly. (...) It concentrated on you with an irresistible prejudice in your favour. It understood you just so far as you wanted to be understood, believed in you as you would like to believe in yourself, and assured you that it had precisely the impression of you that, at your best, you hoped to convey."That’s it!

I love this passage, I think it captures something essential about interpersonal charisma: one that isn’t centered on oneself, but on making others feel seen and understood.

Bottom line

The idea of using a very large context window to process all of The Great Gatsby in one go seems nice, but didn’t work in practice. If anyone succeeds in making this work, please let me know how you went about it!

In the meantime, the more practical solution is to chunk the large document up and process it one fragment at a time. When we go this way, the small difficulty lies in processing/collating the results (which we handled using an LLM to process its own earlier output).

More on this

📖 The Great Gatsby is a great book for humans to read too!

🎧 When “The Great Gatsby” entered public domain, the NPR podcast “Planet Money” celebrated with a special episode on intellectual property. To demonstrate the point that the book is now in public domain, the podcast staff concluded the episode by recording their reading of the book… all of it!

Postcard from Japan

Last photo from Japan – the Golden Pavilion in Kyoto. With the trip firmly in rear window, looking forward to spending more time in Paris this spring 💫.

Have a great week,

– Przemek

📖 The Great Gatsby is a great book for humans to read too! - this has to be one of the best lines in your blog!