The language superpower: running out of wine is not an emergency

🚑 Fine-tuning a language model to distinguish real emergencies from tantrums and comedic exaggerations.

Language is our superpower.

Try searching for “Major disaster” on a social media website and marvel at how easily you can tell apart the different meanings of the phrase:

“Major disaster. I have run out of white wine 😳😩” → comedic exaggeration

“Imagine going back to doing marketing without ChatGPT… a major disaster for the world as we know it.” → hyperbolic metaphor (or so we hope)

“400mm rainfall in 48 hours. This is going to be a major disaster for North Andhra, stay away from water streams” → natural disaster

If we can tell these apart at a quick glance, it’s because the human mind is great at language 💫. It can integrate all the nuances of context and vocabulary and make quick calls that feel effortless only because they are unconscious.

Disaster Tweets

In the Kaggle Disaster Tweets competition we need to replicate this superpower in a computer system. The challenge is to build a system that can recognize which of the given tweets are about real disasters, and which are not.

We’re given a training set of 11k tweets that were already classified. The training examples of tweets that are not about real disasters include:

“Me trying to look cute when crush is passing by”

“Bloody Mary in the sink. Beet juice”

“I know where to go when the zombies take over!!”

The “real disaster” examples include:

“Fatal crash reported on Johns Island”

“Bloody hell it's already been upgraded to 'rioting'”

“And so it begins.. day one of the snow apocalypse”

How would we write a computer program that could tell these categories apart?

Language superpowers

These days we can just ask an AI chatbot like ChatGPT what it thinks about the given tweets. But we need a solution that works at scale (there are 11k tweets in the evaluation data set). It’s also more instructive to build the system ourselves 💪.

While the launch of ChatGPT in the fall of 2022 was the breakthrough moment for public awareness of large language models, the technological advancements that enabled all this language-processing magic came gradually over at least 5 years.

To emphasize this, let’s solve the Kaggle Disaster Tweets challenge using the DeBERTa v3 language model, released by Microsoft in 2021 💫.

Things people do to neural networks…

To solve the challenge, we’re going to “fine-tune” the existing pre-trained language model to classify the tweets for us.

“Fine-tuning” is a very fun concept. We will dive deeper into it in another edition, but the basic idea is this:

📚 take an existing language model that already learned a lot of core concepts of human language

✂️ cut off the last layer of its neural network, which is responsible for the model’s final output (that’s brutal!), and …

⭐️ replace it with a new layer that’s trained specifically on the task we’re trying to accomplish.

For an imperfect but fun metaphor, imagine taking a Ferrari engine and putting it into a lawn mower. You get all of the power of the Ferrari engine, but it’s being used in a new context :).
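To make the three steps concrete, here is a toy sketch in plain Python with no ML libraries. The “pre-trained” network is just a small stack of layers whose random weights stand in for learned ones, and its last layer scores 1000 hypothetical vocabulary entries. All names and sizes (`Linear`, `Network`, `EMBED`, `HIDDEN`, `VOCAB`) are illustrative assumptions, not DeBERTa’s real architecture.

```python
import random
from typing import List

Vector = List[float]


class Linear:
    """A fully connected layer computing y = W x + b."""

    def __init__(self, n_in: int, n_out: int, rng: random.Random) -> None:
        self.weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        self.bias = [0.0] * n_out

    def __call__(self, x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.weights, self.bias)]


class Network:
    """A stack of Linear layers with a ReLU between them (none after the last)."""

    def __init__(self, layers: List[Linear]) -> None:
        self.layers = layers

    def __call__(self, x: Vector) -> Vector:
        for i, layer in enumerate(self.layers):
            x = layer(x)
            if i < len(self.layers) - 1:
                x = [max(0.0, v) for v in x]  # ReLU
        return x


rng = random.Random(0)
EMBED, HIDDEN, VOCAB = 16, 32, 1000  # toy sizes, not DeBERTa's real dimensions

# 📚 Stand-in for a pre-trained language model: its last layer scores every
# vocabulary entry (random weights play the role of "learned" ones here).
pretrained = Network([Linear(EMBED, HIDDEN, rng), Linear(HIDDEN, HIDDEN, rng),
                      Linear(HIDDEN, VOCAB, rng)])

# ✂️ Cut off the last layer, reusing the body (and its weights) as-is...
body = pretrained.layers[:-1]
# ⭐️ ...and attach a fresh head with 2 outputs: "not disaster" vs "real disaster".
classifier = Network(body + [Linear(HIDDEN, 2, rng)])

x = [rng.random() for _ in range(EMBED)]  # stand-in for an encoded tweet
print(len(pretrained(x)), len(classifier(x)))  # 1000 2
```

The new head starts out random, so it is useless until trained. In practice, fine-tuning then adjusts the head and commonly the reused layers as well, usually with a small learning rate. Libraries such as Hugging Face Transformers can perform the head swap when loading a checkpoint, e.g. via `AutoModelForSequenceClassification.from_pretrained(..., num_labels=2)`.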

Let’s mow that lawn!

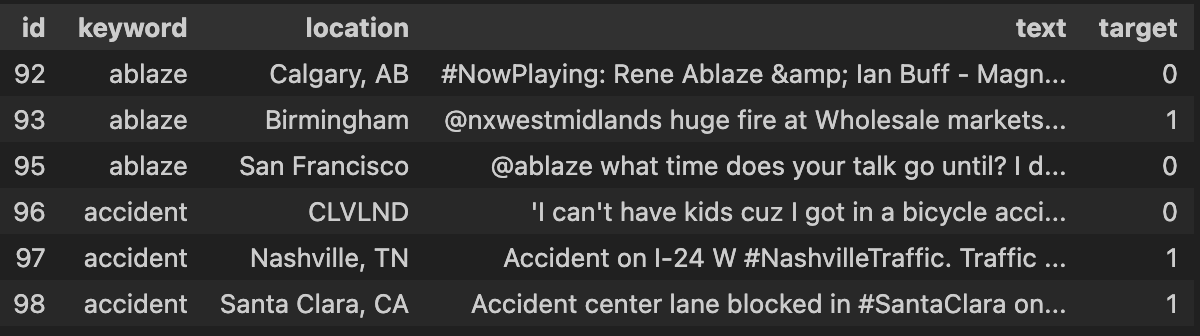

Each tweet in the training set comes with a keyword (a disaster-related term that appears in the tweet), the location from which it was posted, and the text. We also get the “target” column, which indicates whether the tweet refers to a “real disaster”.

We need to process this data so that it’s in a format suitable for fine-tuning. For each tweet in the training set, we’re going to pass two pieces of information to the model:

Tweet data combined into one string. I went for a format that looks like this:

'KEYWORD: ablaze; LOCATION: Birmingham; TEXT: nxwestmidlands huge fire at Wholesale markets ablaze' (The exact format doesn’t matter that much. That’s the cool thing about language models: they work with language and aren’t picky about formatting details.)

The “target” value, i.e., whether the tweet is about a “real disaster”. This is what we’re trying to teach the model to predict.

The fine-tuning takes 11 minutes on my laptop (after spending 3 hours trying to overcome unhelpful errors, but that’s another story).

Fine-tuning is the main computation-intensive part. After that, making predictions on the evaluation data is much faster 🚀.
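Why is training so much heavier than prediction? Training loops over the whole training set many times, running a forward pass and a weight update for every example, while prediction is a single forward pass per tweet. The sketch below shows this with a deliberately tiny stand-in: a bag-of-words logistic regression trained with stochastic gradient descent instead of a transformer. The six training tweets, the hyperparameters and the forward-pass counter are made up for illustration.

```python
import math
from typing import Dict, List, Tuple

EPOCHS, LEARNING_RATE = 200, 0.5  # toy values, not tuned for real data
forward_passes = 0  # counts how often the model is evaluated


def forward(weights: Dict[str, float], bias: float, text: str) -> float:
    """One forward pass: estimated probability that `text` is about a real disaster."""
    global forward_passes
    forward_passes += 1
    logit = bias + sum(weights.get(word, 0.0) for word in text.lower().split())
    return 1.0 / (1.0 + math.exp(-logit))


def train(examples: List[Tuple[str, int]]) -> Tuple[Dict[str, float], float]:
    """Fit the weights with stochastic gradient descent on the log loss."""
    weights: Dict[str, float] = {}
    bias = 0.0
    for _ in range(EPOCHS):  # many passes over the whole training set...
        for text, target in examples:  # ...each with a forward pass and an update
            error = forward(weights, bias, text) - target  # d(log loss)/d(logit)
            for word in text.lower().split():
                weights[word] = weights.get(word, 0.0) - LEARNING_RATE * error
            bias -= LEARNING_RATE * error
    return weights, bias


def predict(weights: Dict[str, float], bias: float, texts: List[str]) -> List[int]:
    """Prediction: one forward pass per tweet and no weight updates."""
    return [int(forward(weights, bias, text) >= 0.5) for text in texts]


train_set = [
    ("fatal crash reported on the highway", 1),
    ("wildfire forces evacuation", 1),
    ("flood warning stay away from rivers", 1),
    ("ran out of wine total disaster lol", 0),
    ("my hair is a disaster today", 0),
    ("zombies take over lol", 0),
]
weights, bias = train(train_set)
training_passes = forward_passes  # 200 epochs x 6 tweets
predictions = predict(weights, bias, ["crash on the highway", "wine disaster lol"])
print(training_passes, forward_passes - training_passes, predictions)  # 1200 2 [1, 0]
```

With DeBERTa the numbers are far larger (thousands of tweets, a few epochs, a much bigger model, and a backward pass for every training example), but the asymmetry is the same: training repeats work over the whole data set, prediction does it once per tweet.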

Evaluation

Let’s see how DeBERTa v3 did on the problem!

A score of 0.81, not bad! This puts us at position 301 out of 1080 on the leaderboard.
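Accuracy is not the only way to score a binary classifier, and Kaggle’s leaderboard for this competition is, to my knowledge, ranked by F1 score rather than plain accuracy (the competition’s Evaluation page is the authority here). This sketch computes both from scratch on ten made-up labels to show how they can differ; the function name and the data are illustrative only.

```python
from typing import List, Tuple


def accuracy_and_f1(y_true: List[int], y_pred: List[int]) -> Tuple[float, float]:
    """Accuracy and F1 for binary labels, where 1 means "real disaster"."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # disasters we caught
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # disasters we missed
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1


# Made-up labels: 3 real disasters among 10 tweets; the model catches 2 of them
# and raises 1 false alarm.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
accuracy, f1 = accuracy_and_f1(y_true, y_pred)
print(f"accuracy={accuracy:.3f} f1={f1:.3f}")  # accuracy=0.800 f1=0.667
```

F1 ignores the easy true negatives and focuses on how well the “real disaster” class is handled, so a model that mostly predicts “not a disaster” can look better on accuracy than on F1.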

There are a couple of tweaks we could try to improve the score:

try different models, including some of the latest state-of-the-art open models such as Mistral and Llama 2

clean the training data set. It contains a lot of mislabeled examples, e.g. this one is marked as “real disaster” 🙃: “Didnt expect Drag Me Down to be the first song Pandora played OMFG I SCREAMED SO LOUD My coworker is scared”

try being smarter than the language model by adding handwritten heuristics
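One cheap first pass at cleaning is to look for tweets that appear more than once with contradicting labels and drop them (or relabel them by hand). A minimal sketch, assuming the training data is a list of `(text, target)` pairs; the function names are hypothetical and the last two rows are invented to show a conflict.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def _normalize(text: str) -> str:
    """Compare tweets case-insensitively, with runs of whitespace collapsed."""
    return " ".join(text.lower().split())


def conflicting_duplicates(rows: List[Tuple[str, int]]) -> Dict[str, Set[int]]:
    """Map each normalized tweet text that carries more than one label to its labels."""
    labels: Dict[str, Set[int]] = defaultdict(set)
    for text, target in rows:
        labels[_normalize(text)].add(target)
    return {text: seen for text, seen in labels.items() if len(seen) > 1}


def drop_conflicts(rows: List[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """Keep only rows whose text never appears with a contradicting label."""
    conflicts = conflicting_duplicates(rows)
    return [(text, target) for text, target in rows if _normalize(text) not in conflicts]


rows = [
    ("Fatal crash reported on Johns Island", 1),
    ("Me trying to look cute when crush is passing by", 0),
    ("Storm warning: stay indoors tonight", 1),   # invented example
    ("storm warning:  stay indoors tonight", 0),  # same tweet, opposite label
]
print(conflicting_duplicates(rows))  # {'storm warning: stay indoors tonight': {0, 1}}
print(len(drop_conflicts(rows)))     # 2
```

This only catches one kind of label noise. A lone mislabeled tweet like the Pandora one above would slip through; spotting those usually means reviewing examples by hand, for instance the ones where a trained model confidently disagrees with the given label.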

The full code of the solution presented so far is in this Kaggle notebook; you can use it as a base for further experiments.

Bottom line

In a Promethean feat of technological progress, computers have stolen what used to be a uniquely human superpower: understanding the nuances of language. The good news is that this lets us build capable systems that work with language at scale, like the tweet classifier we just constructed.

To build such a system we didn’t need to make our own big language model from scratch. That’s good because it would be very slow and very costly. Instead, in less than 50 lines of Python code we can take a pre-trained model and fine-tune it to do precisely what we want.

More on the magic that makes this possible in a future edition 💫!

In other news

🤖 Speculation is running wild about a potential AI breakthrough at OpenAI. Yann LeCun seems pretty sure about what this is about.

📰 An interesting profile of Ilya Sutskever, OpenAI’s chief scientist and one of the people at the center of the recent events.

🙏 It’s Thanksgiving week in the US. I like this thought exercise from moretothat on how to be more thankful: if you were to lose everything you have in your life (friendships, current health, etc.), how badly would you want it back?

Postcard from Paris

After a few weeks of rain, the weather in Paris got colder and drier; we even get to see blue sky every now and then.

Stay warm 💫,

– Przemek
