Think before: chain-of-thought prompting for creative brainstorming

🧩 Putting a viral reel to test; creative brainstorming vs math puzzles; LLMs need tokens to "think"

“I’ve been adding this sentence to all my ChatGPT prompts and I get better output every single time,” says Justin in a viral Instagram reel, before introducing a variant of what’s called “chain of thought prompting”.

Let’s see what this very popular prompting technique is, how it works in practice, and try to get an intuitive sense of why it (sometimes) works.

What is it

“Chain of thought prompting” is a way of formulating questions we ask of language models. In this technique, we ask the model to write out its reasoning before producing the final answer.

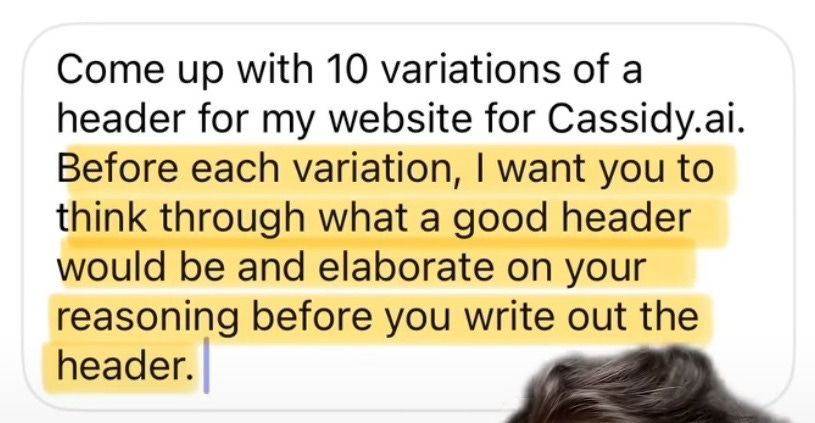

In the Instagram reel, the author uses this formula:

Come up with 10 variations of (something you want done). Before each variation, I want you to think through what a good result would be and elaborate on your reasoning before you write out the answer.
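If you want to reuse the formula across tasks, it can be wrapped in a small helper. This is only a sketch: the function name `chain_of_thought_prompt` and its parameters are made up for illustration, and the wording is copied from the reel's formula above.

```python
def chain_of_thought_prompt(task: str, variations: int = 10) -> str:
    """Fill the reel's formula with a concrete task (hypothetical helper)."""
    return (
        f"Come up with {variations} variations of {task}. "
        "Before each variation, I want you to think through what a good result "
        "would be and elaborate on your reasoning before you write out the answer."
    )


# Example task; any description of "something you want done" works here.
prompt = chain_of_thought_prompt("a subtitle for my newsletter post", variations=3)
print(prompt)
```

The resulting string can be pasted into any chatbot; nothing here depends on a particular model or API.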

Let's try it: creative brainstorming

I asked Claude (an LLM-based chatbot from Anthropic) to help generate subtitle ideas for this post.

I compared two prompts. Classic looks like this:

Here's a newsletter post I drafted. Can you come up with a good subtitle that summarizes its content and encourages the reader to read it? Try to squeeze at least 3 different points/ideas from the post in the subtitle and separate them with semicolons.

Please give me 3 options.

"""

(post content, pasted using the technique we showed last week)

"""

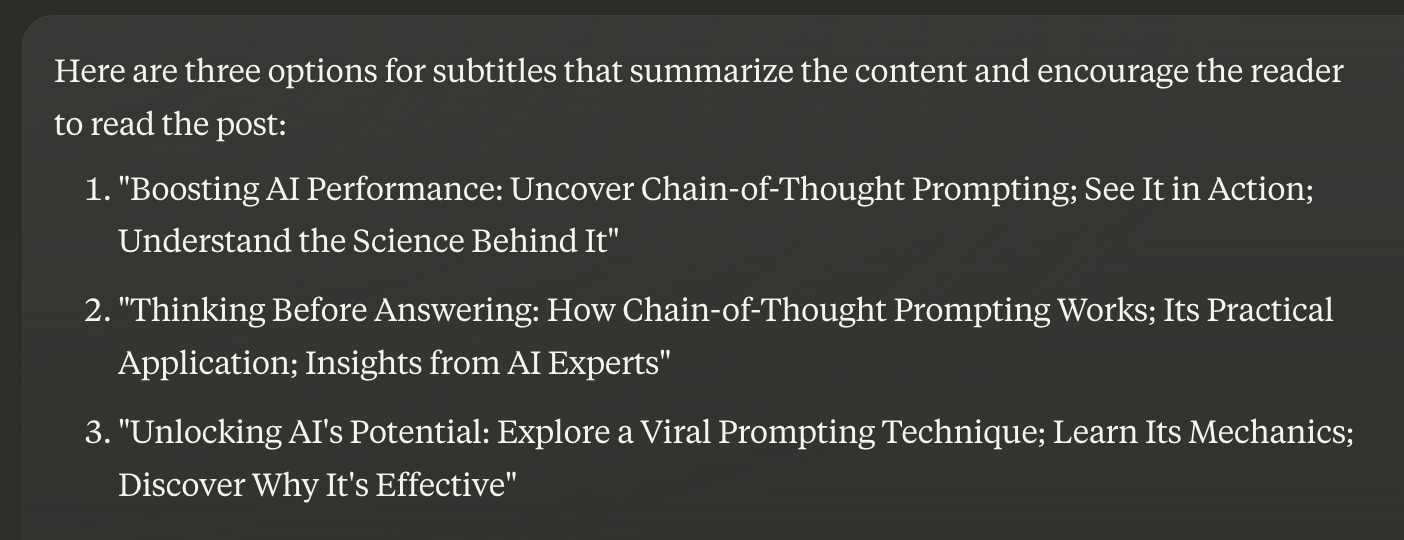

Claude generates the subtitle ideas as requested:

The chain-of-thought variant included an extra mention:

Before listing the options, please first list the key points and notable quotes from the post that the subheader should summarize.

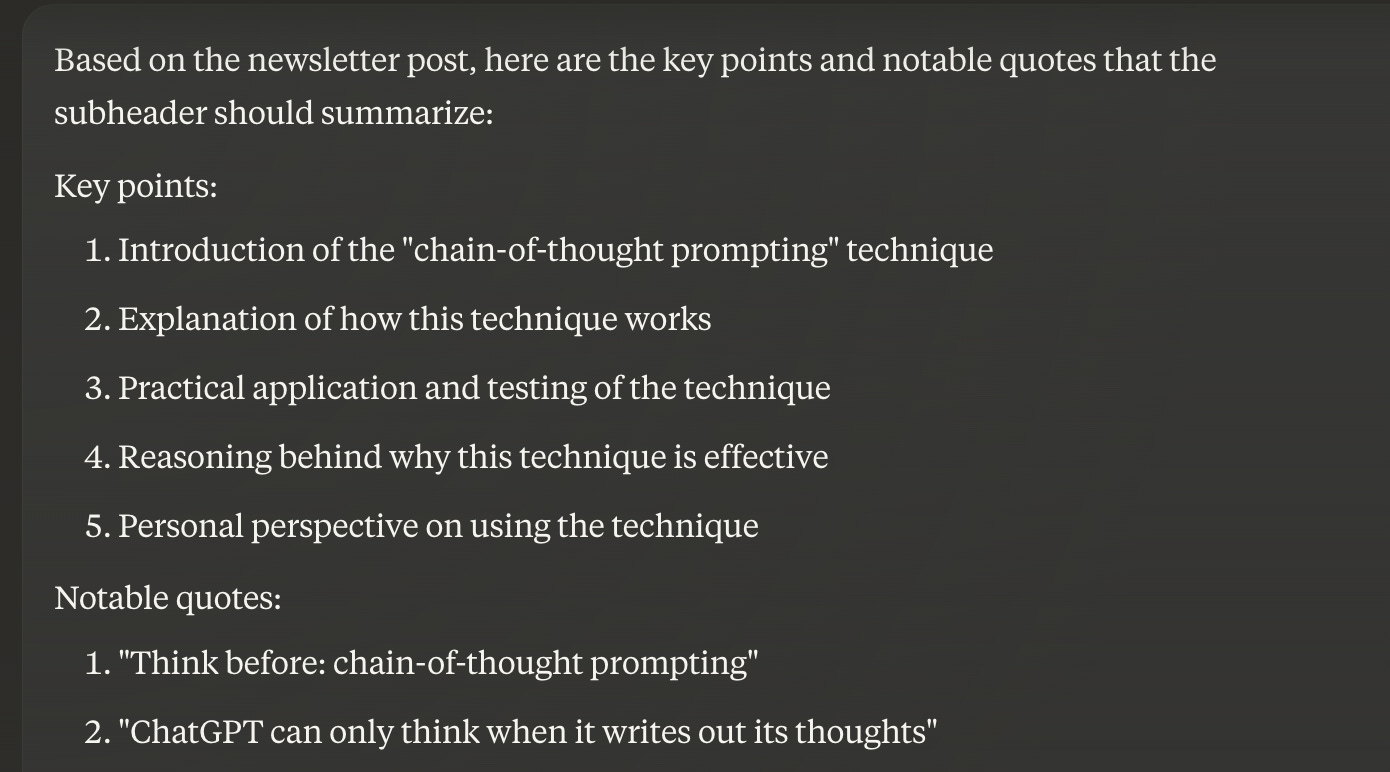

, so that Claude first summarizes the main points of the post:

and only then tries to compact them further into the subtitle ideas.
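To keep a comparison like this fair, the two prompts should differ only in the extra instruction. Here is a minimal sketch of how both variants could be built from the same draft. The names `build_prompt`, `CLASSIC_INSTRUCTIONS` and `COT_EXTRA` are hypothetical, and placing the extra instruction right after "Please give me 3 options." is an assumption.

```python
CLASSIC_INSTRUCTIONS = (
    "Here's a newsletter post I drafted. Can you come up with a good subtitle "
    "that summarizes its content and encourages the reader to read it? "
    "Try to squeeze at least 3 different points/ideas from the post in the "
    "subtitle and separate them with semicolons."
)

COT_EXTRA = (
    "Before listing the options, please first list the key points and notable "
    "quotes from the post that the subheader should summarize."
)


def build_prompt(post: str, chain_of_thought: bool) -> str:
    """Build the classic or the chain-of-thought prompt for the same post."""
    parts = [CLASSIC_INSTRUCTIONS, "Please give me 3 options."]
    if chain_of_thought:
        # Assumption: the extra instruction goes just before the pasted post.
        parts.append(COT_EXTRA)
    # The post is wrapped in triple quotes, as in the prompt shown above.
    parts.append(f'"""\n{post}\n"""')
    return "\n\n".join(parts)


post = "(post content goes here)"
classic = build_prompt(post, chain_of_thought=False)
cot = build_prompt(post, chain_of_thought=True)
```

Sending `classic` and `cot` to the same chatbot, ideally a few times each, gives a side-by-side view of whether the extra instruction changes anything.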

Does it work better? I find the results equally mediocre :)

Neither version conforms to the format I asked for: I wanted 3 different points or quotes separated by semicolons. Instead, the output always starts with an opening fragment and a colon, and only then lists the three selected points.

The answers are very generic, e.g. it says “Its practical application”, or “The science behind its effectiveness”, instead of directly using the actual point we’re making (see the real subtitle I wrote myself: 🧩 Putting a viral reel to test; creative brainstorming vs math puzzles; LLMs need tokens to "think")

In my experimentation (with Claude, ChatGPT and other chatbots) I cannot reproduce the claim from the viral video: chain of thought doesn't seem to help much with creative brainstorming.

Where it does work: puzzles

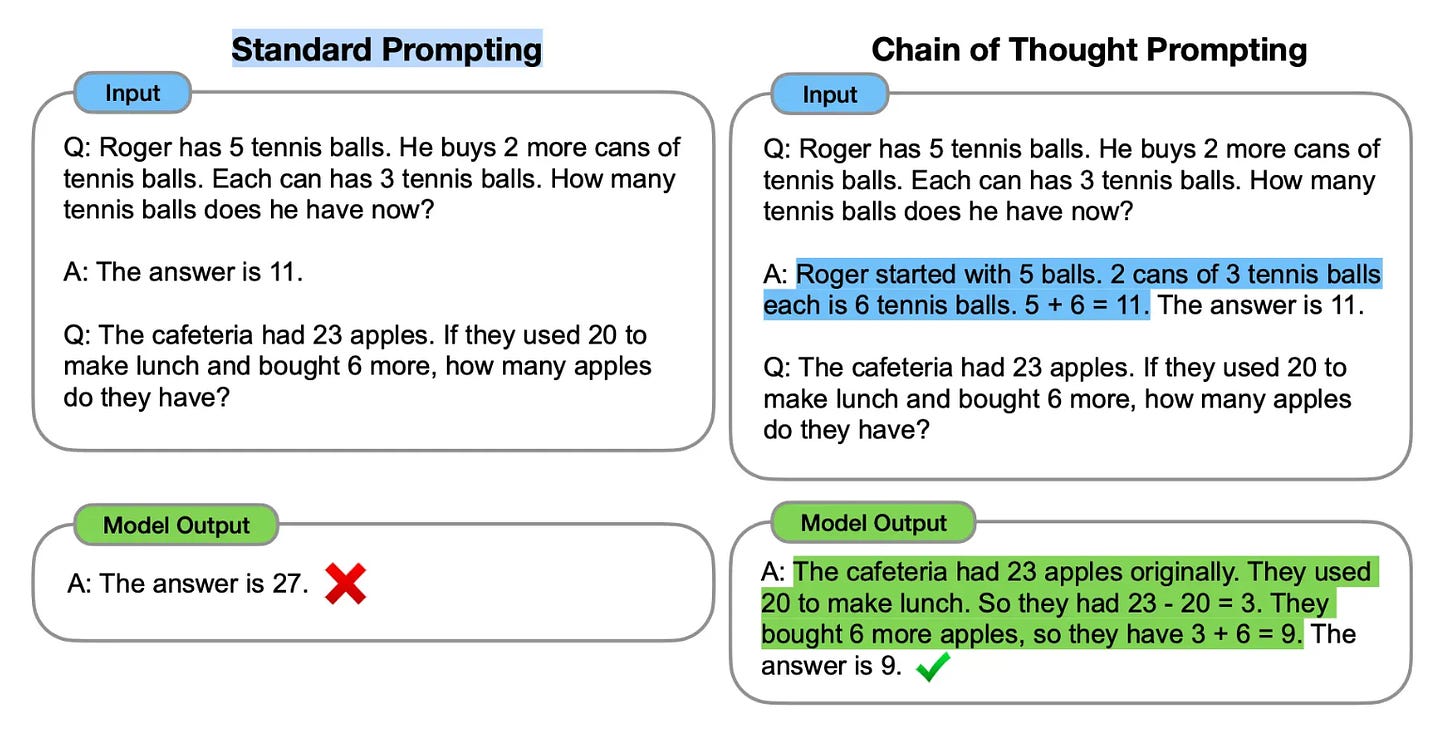

However, there's a well documented body of examples of chain-of-thought improving the results on math questions and puzzles: (source)

The only caveat is: how often do we use LLMs to solve math puzzles?

Why does it (sometimes) work

Language models produce their output one token (word fragment) at a time. The amount of compute done to predict each token is bounded: there’s no way for the model to realise that it’s solving a particularly hard problem and dedicate more compute to it.

Each time a token is produced, it's computed based on everything that came before. That includes all the output tokens that were produced before! This means that the reasoning the model writes out becomes intermediate computation, which is then used to produce the final answer.
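A toy example can make this more tangible. The sketch below is not how a transformer works internally. It only imitates the two properties described above: each step does a bounded amount of work (here, at most one addition), and everything written so far is fed back as input to the next step. The names `next_token` and `generate` are made up for this illustration.

```python
from typing import List


def next_token(numbers: List[int], written: List[int]) -> int:
    """Toy stand-in for one model step: at most ONE addition per token."""
    if not written:
        # Nothing written yet: the best a single step can do is add two inputs.
        return numbers[0] + numbers[1]
    # Continue from the last running total that was written out earlier.
    next_index = len(written) + 1
    return written[-1] + numbers[next_index]


def generate(numbers: List[int], steps: int) -> List[int]:
    """Produce `steps` tokens, feeding each output back in as context."""
    written: List[int] = []
    for _ in range(steps):
        written.append(next_token(numbers, written))
    return written


numbers = [3, 5, 7, 9]                        # task: add these up (true sum is 24)
direct = generate(numbers, steps=1)           # answer forced into a single token
with_reasoning = generate(numbers, steps=3)   # running totals written out first

print(direct[-1])          # 8: one step was not enough work
print(with_reasoning)      # [8, 15, 24]: the last token is the correct sum
```

Forced to answer in one token, the toy can only do one addition and gets it wrong. Allowed to write out the running totals, it uses its own earlier output as scratch space and arrives at 24.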

Andrej Karpathy offers a crisp summary: You can't give a transformer a complicated question and expect to get the right answer in a single token. These transformers need tokens to “think” (source).

Conclusion

Chain-of-thought is a cool technique, but in my experience it's useful mostly for math puzzles. When using LLMs for creative brainstorming or reviewing newsletter drafts, I ask my questions directly and seem to get equally good results.

I do find chain-of-thought useful when working with my own, human mind: when journaling on paper, writing down my thoughts helps me feel more confident in the conclusions I eventually reach 💫.

Credits

Thank you Alexander for sending me the reel that motivated this post!

In other news

❄️ Economic view on AI: is the winter coming? Yes according to Latent Space and Gary Marcus: Users have lost faith, clients have lost faith, VC’s have lost faith.

🎥 Engineering view on AI: yes, LLMs are imperfect and often incorrect, but that does not mean they are not useful. They allow us to build things we wouldn't build otherwise. See the PyCon keynote on the state of AI by Simon Willison (and an earlier pnote post on this).

Postcard from Paris

I got to watch a few Olympic tennis matches at the Roland Garros courts in Paris. Kudos to the brave players who played 2h+ matches in the scorching heat. (Having watched Challengers before made this even more fun.)

Now heading for a week of vacation at an improv retreat. Will report back when I re-emerge :).

Have a great week,

– Przemek